Research Article

Creative Commons, CC-BY

Direct Observation of Procedural Skills (DOPS): A Workplace-Based Assessment (WPBA) In Health Professions Education and Practice

*Corresponding author: Vasudeva Rao Avupati, Department of Pharmaceutical Chemistry, School of Pharmacy, E-Learning Lead, Open and Distance Learning (ODL) Expert, School of Pharmacy, IMU University, Malaysia.

Received: October 24, 2024; Published: October 28, 2024

DOI: 10.34297/AJBSR.2024.24.003218

Abstract

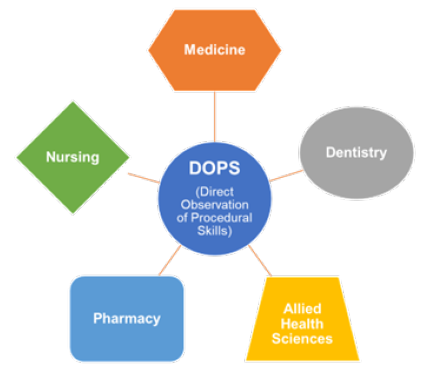

Direct Observation of Procedural Skills (DOPS) is a widely used assessment technique in health professions education that measures students’ proficiency in clinical procedures. It is effective in fostering skill acquisition, enhancing feedback, and ensuring patient safety. DOPS consists of structured assessment, real-time observation, immediate feedback, and a standardized evaluation process. It offers several benefits for health professions education, including authentic assessment, formative assessment, standardized evaluation, and a focus on patient safety. However, DOPS has its challenges, including resource-intensive implementation, inter-rater variability, feedback subjectivity, and integration with the curriculum. It requires sufficient resources, such as qualified assessors, time allotments, and appropriate clinical settings. To minimize inter-rater variability, assessors should participate in adequate training and calibration sessions. Feedback subjectivity can be addressed by providing precise instructions and rubrics and by conducting regular feedback training to improve consistency. Overall, DOPS is a valuable tool for health professions education, providing a standardized and accurate way to evaluate students’ procedural skills. This review discusses how DOPS supports the growth of skilled healthcare professionals across various disciplines, including medicine, dentistry, pharmacy, nursing, and allied health sciences, with an evidence-based discussion of reliability, validity, educational impact, acceptability, feasibility, and fidelity.

Keywords: Direct Observation of Procedural Skills (DOPS), Medicine, Dentistry, Pharmacy, Nursing, Allied health sciences

Introduction

The assessment of clinical competence among healthcare professionals (physicians, nurses, pharmacists, dentists and others) at the workplace emphasizes the importance of adapting Miller's framework [1] to Health Professions Education (HPE) and practice. A candidate's actual professional practice in the workplace is assessed through Workplace-Based Assessment (WPBA), which uses various assessment tools [2]. Traditional assessment methods are limited in their ability to evaluate a trainee's actual practice, which is why there is growing interest in authentic WPBA [3]. WPBA can be categorized into two groups: observation-based and evidence accumulation-based [4]. Observation-based WPBA includes single-encounter assessments such as the Mini-Clinical Evaluation Exercise (miniCEX), Case-Based Discussion (CbD), and Direct Observation of Procedural Skills (DOPS), and multiple-observation assessments such as peer assessment and patient surveys, which are also known as Multisource Feedback (MSF). Evidence accumulation-based WPBA includes logbooks and portfolios [5]. DOPS is a systematic method of WPBA that has attracted the interest of healthcare professionals and practitioners: a supervisor observes a trainee performing a task in their everyday work environment and provides feedback on their performance [6]. It is a valuable tool for assessing practical skills and competencies in health professions [7]. It provides learners with immediate feedback on their performance, enhances learning and growth, and helps them identify their strengths and weaknesses [8]. However, it has limitations and challenges that should be considered during implementation [9]. To ensure effective implementation, adequate training and resources should be provided to both clinical supervisors and learners, clear and specific assessment criteria should be developed, and DOPS assessments should be integrated into HPE programs [10].

Methodology

I first selected DOPS as the assessment tool of interest and then applied a systematic search strategy to examine the scope of DOPS and its application in health professions practice. The search terms “DOPS OR Direct Observation of Procedural Skills” and “DOPS AND Medicine OR Dental OR Nursing OR Pharmacy” were used to search the literature. Studies were retrieved from Elsevier, Springer, Google Scholar, PubMed, and Scopus. The included studies covered HPE disciplines (medicine, dentistry, nursing, pharmacy, and allied health sciences) and used the DOPS assessment tool. The studies were arranged in chronological order and their findings were considered for review. All duplicate studies were eliminated, the remaining articles were screened by title and abstract, and the articles available in full text were organised into the relevant disciplines (Figure 1).

Evidence-based discussion

Based on the above literature search, I critically reviewed the effectiveness of DOPS as a WPBA tool against the structured criteria of the utility index proposed by van der Vleuten. The criteria are: reliability, which checks consistency and reproducibility (Cronbach's alpha > 0.70); validity, which determines the accuracy of the intended measurement; educational impact, which assesses the effect on students' learning; acceptability, which reflects the considerations of different stakeholders; feasibility, which concerns practicality; and fidelity, which measures the degree of usability [11]. The selected studies are summarised below with reference to these criteria.
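As an illustration of the reliability criterion, the following is a minimal Python sketch of how Cronbach's alpha could be computed for a DOPS form. The function name `cronbach_alpha` and the rating matrix are hypothetical and are not taken from any of the reviewed studies; each row is assumed to be one trainee and each column one rated item.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a trainee-by-item score table.

    scores: list of rows, one per trainee; each row holds that
    trainee's ratings on the k items of the form (hypothetical layout).
    """
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two trainees")
    # Sample variance of each item (column) across trainees
    item_variances = [variance(col) for col in zip(*scores)]
    # Sample variance of each trainee's total score
    total_variance = variance([sum(row) for row in scores])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Illustrative ratings only: 5 trainees rated on 4 items (1-5 scale)
ratings = [
    [4, 4, 5, 4],
    [3, 3, 3, 2],
    [5, 4, 5, 5],
    [2, 3, 2, 2],
    [4, 5, 4, 4],
]
alpha = cronbach_alpha(ratings)
print(f"alpha = {alpha:.3f}, meets 0.70 threshold: {alpha > 0.70}")
```

The sketch uses the sample variance throughout; the studies reviewed here may have used statistical packages with different defaults.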

Medical Education

DOPS was used to evaluate the efficacy of a Postpartum Tubal Sterilization (PPTS) training program with a minilaparotomy approach in Ob/Gyn residents. The residents' knowledge and surgical skills improved significantly after the training program, and the Intraclass Correlation Coefficient (ICC) between the DOPS scores given by two experts was 0.834, indicating reliability [12]. A colonoscopy DOPS competency assessment tool was evaluated across 279 training units: 10,749 DOPS were completed for 1,199 trainees, and an acceptable reliability threshold (G>0.7) was achieved with three assessors [13]. Formative gastroscopy DOPS assessments of 987 trainees were analysed under three distinct groups, “pre-procedure”, “technical”, and “post-procedure non-technical”, and the combination of assessments from three assessors achieved the reliability threshold for generalisability coefficients (G>0.7). This study suggests that DOPS assessment should be incorporated into the training of gastroscopy trainees to improve their performance and ensure patient safety [14]. A study of 37 medical students from European universities explored the usability of DOPS for assessing laparoscopic skills. Cronbach's alpha was 0.819 for all 37 trainees and 0.806 and 0.839 for the A and B subgroups, respectively, and the reliability scores were interpreted as well accepted (>0.8) [15]. DOPS has also been used to grade trainees performing ultrasound-guided regional anesthesia (UGRA); each anesthetist performed four UGRA procedures that were assessed by two independent raters using the DOPS assessment tool. The inter-rater reliability of the DOPS assessment tool for UGRA was excellent, with an ICC of 0.91 (95% confidence interval [CI]: 0.85-0.95). The intra-rater reliability was also excellent, with ICCs ranging from 0.88 to 0.95 (95% CI: 0.77-0.98).
The use of this tool may help to improve the quality and safety of UGRA procedures by identifying areas for improvement in anesthetists’ procedural skills. Although the assessment has good test-retest reliability (ICC=0.85), scores correlated only modestly with colonoscopy performance in the clinical setting, and assessors were not blinded to endoscopists' skill level [16]. The Joint Advisory Committee on GI Endoscopy's Direct Observation of Procedure (JAG-DOPS) Assessment Tool has been formally integrated into training and credentialing guidelines in the United Kingdom for the competency assessment of colonoscopy skills. The tool has achieved reliability in various study designs: 2 cases and 2 assessors (G=0.81), 1 case and 1 assessor (G=0.65), and 3 cases and 4 assessors (G=0.90) [17]. Another study supported the use of DOPS as a reliable assessment tool for the high-stakes assessment of gastrointestinal endoscopy competency because of its high relative reliability (G=0.81) [18].
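Several of the studies above report an ICC between raters without stating which ICC form was used. As a hedged illustration only, the sketch below computes one common form, the two-way random-effects, absolute-agreement, single-rater ICC, often written ICC(2,1), from a trainee-by-rater score table. The function name `icc_2_1` and the example scores are hypothetical, and the choice of ICC(2,1) is an assumption rather than the method of any cited study.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of rows, one per trainee; each row holds the score
    given by each rater (same raters, same order, in every row).
    """
    n = len(ratings)      # number of trainees (targets)
    k = len(ratings[0])   # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(col) / n for col in zip(*ratings)]

    # Two-way ANOVA sums of squares
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Illustrative DOPS totals only: 5 trainees, each scored by 2 raters
dops_scores = [
    [18, 17],
    [12, 13],
    [20, 19],
    [9, 11],
    [15, 15],
]
print(f"ICC(2,1) = {icc_2_1(dops_scores):.3f}")
```

Other ICC forms (for example, consistency rather than absolute agreement, or the average of several raters) give different values from the same data, so a reported ICC is only interpretable alongside the form that was used.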

DOPS was used to measure the effectiveness of a simulation-based workshop in improving advanced neonatal procedural skills, and DOPS scores improved significantly [19]. An evaluation of DOPS in audiology showed good content validity, with an ICC of 0.88 for inter-rater reliability and 0.92 for intra-rater reliability. The study findings suggest that DOPS is a valid and reliable tool for evaluating procedural skills in audiology [20]. On the basis of excellent inter-rater reliability (weighted kappa=0.93) and high internal consistency (Cronbach's alpha=0.93), DOPS was concluded to be a useful method for evaluating the competency of trainees in pediatric colonoscopy [21]. In podiatry, the DOPS tool was adapted to suit the specific procedures performed by podiatrists, and inter-rater reliability was established by comparing the scores of two independent assessors. The study concluded that the adapted DOPS tool is feasible and reliable for assessing procedural skills in podiatry [22]. DOPS assessments have the potential to be valuable in the training of histopathology trainees, but effective implementation requires careful attention to the challenges and barriers identified by trainers, such as the need for clear assessment criteria and guidelines, standardized assessment forms, and regular feedback to trainees [23]. The DOPS tool is a valuable addition to the training and assessment of trainees in flexible sigmoidoscopy, providing more accurate and objective assessments of trainees' performance, which could lead to better competence outcomes [24]. Students who received DOPS measured prostate volume significantly more accurately than those who did not. This suggests that DOPS is an effective method for improving the accuracy of students' measurement of prostate volume, as well as their ability to predict treatment outcomes [25].
A prospective study evaluated the effectiveness of teaching ultrasound to medical students during their undergraduate medical education using a curriculum that follows the European Federation of Societies for Ultrasound in Medicine and Biology (EFSUMB) standards. The study was conducted in a medical school in Germany, with 156 medical students enrolled. The students' knowledge and skills in ultrasound improved significantly after attending the course, with an overall mean satisfaction score of 4.6 out of 5. The study concluded that incorporating ultrasound into the undergraduate medical curriculum in line with the EFSUMB standards is an effective way to teach ultrasound and is well received by students [26].

One study showed that incorporating Virtual Learning Environment (VLE)-based WPBA, supported by DOPS assessment, into postgraduate chemical pathology residency programs is an effective way to foster teaching and learning and improve residents' knowledge and skills [27]. Findings from postgraduate training in urology suggest that DOPS is a useful assessment tool, providing a structured and objective way of assessing procedural skills that can help identify areas where trainees need further training. The authors recommended that DOPS be incorporated into postgraduate training programs in urology [28]. A study that investigated the reliability of a portfolio of workplace-based assessments in anesthesia training found the results to be consistent [5]. The DOPS test is a reliable and valid tool for evaluating the procedural skills of orthopedic residents and can be used to improve their skills and prepare them for clinical practice [6]. Another study concluded that DOPS is a feasible, reliable, and valid assessment tool in surgery and recommended its implementation in surgical training programs to improve the quality of surgical training and enhance patient safety [29]. DOPS was also found to be a useful tool for assessing trainees' professional development and patient care, helping identify areas for improvement and providing opportunities for feedback and reflection. It has been well received by trainees and supervisors and has been found to be effective in improving patient care [30]. One study explored the perceptions of medical students on the use of the DOPS assessment tool in the clinical setting. The study involved focus group discussions with medical students from two different medical schools, which were audio-recorded, transcribed, and analyzed thematically.
The results of the study revealed that medical students had a good understanding of the DOPS assessment tool, perceived it as beneficial for their learning and development, and were generally confident in their ability to perform procedural skills. However, some students expressed concerns about the potential for bias and subjectivity in the assessment process. The authors suggested further research could be conducted to address these concerns and explore the use of the DOPS assessment tool in other healthcare professions [31].

DOPS is well accepted for assessing the procedural skills of medical trainees in different specialties [32]. The findings of a pilot study suggest that DOPS was a feasible and practical tool for assessing surgical skills in the General Surgery discipline [33]. DOPS is an effective training tool in otorhinolaryngology (ORL) training and can help improve trainees' confidence and performance [34]. The use of DOPS as formative tests in head and neck ultrasound education is feasible and well received by medical students and residents. However, the limitations of DOPS as formative tests should be addressed to maximize its potential [35]. One study concluded that DOPS is an effective assessment tool for evaluating procedural skills in anaesthesiology postgraduate students and recommended its use in conjunction with other assessment tools for a comprehensive evaluation of students' performance [7]. In another study, residents and faculty members perceived DOPS to be a useful formative assessment tool, but there were some barriers to implementation, such as time constraints and a lack of resources. Additionally, some faculty members expressed concerns about the potential misuse of DOPS. Overall, residents felt that DOPS had a positive impact on their learning and skill development [36]. In a study on ophthalmology interns, a DOPS tool covering 12 clinical procedures commonly performed by ophthalmology interns was used to assess the procedural skills of 30 interns. The internal consistency of the DOPS tool was found to be high. It can be used to provide objective feedback to the interns, identify areas for improvement, and assess the effectiveness of the internship program [37].

One study revealed several key factors that influence the fidelity of DOPS, including trainee characteristics, supervisor characteristics, workplace culture, and organizational factors. The study’s theoretical model provides a framework for understanding the complex relationships between the factors that affect the implementation of DOPS [38].

Dental Education

A study of 36 postgraduate students and 6 faculty members in periodontology and implantology concluded that, owing to its high inter-rater reliability, DOPS is a reliable and efficient assessment tool. The results suggest that DOPS is a suitable and useful tool in periodontology and implantology: students showed a marked improvement in their clinical abilities over successive encounters, and prompt faculty feedback enabled them to improve further. The authors concluded that DOPS is an effective and promising method for assessing clinical competencies [39]. In another study, peer DOPS, a validated version of the assessment form, was selected as a peer assessment protocol to assess clinical performance by peers and faculty and to estimate the educational impact. The results provided preliminary evidence that dental students’ ability to assess their peers can be fostered and brought closer to that of experienced faculty through practice and assessment-specific instruction [40]. An analysis of 8 years of data on postgraduate dental trainees examined the effectiveness of the existing assessment methods for complicated tooth extraction competency. The results revealed that the existing assessment methods are effective, but there is still room for improvement, particularly in providing more detailed feedback to trainees who fail the assessments [41]. The inclusion of DOPS in the in-training evaluation of undergraduate dental students specializing in periodontia appears to have good acceptability and feasibility, and its usefulness would be increased by faculty development in observation and feedback. A pilot study showed that DOPS was feasible and acceptable as an assessment tool for dental students in India, with both students and faculty members reporting positive experiences with DOPS.
However, the weaknesses identified included the need for more training on how to use DOPS and the need to standardize the assessment criteria. The pilot study demonstrated that DOPS is a feasible and acceptable assessment tool for dental students in India, but further research is needed to determine its effectiveness in improving the quality of dental education and to identify strategies for addressing the identified weaknesses [42]. A quasi-experimental study with pre-test and post-test measures found that teaching orthodontic procedural skills to Bachelor of Dental Surgery (BDS) students using DOPS is more efficient than teaching them in the conventional way. Thirty BDS students were randomly split into an experimental group and a control group. The outcomes revealed that DOPS implementation significantly improved the experimental group's orthodontic abilities [43].

Nursing Education

A study of nursing students' perceptions of DOPS showed that its use can improve the performance of nursing students and help them identify their strengths and weaknesses. The implementation of DOPS requires careful planning and training of the assessors [44]. In another study, a significant improvement in the procedural skills of nursing students was observed after the DOPS intervention. The nursing students' perception of DOPS was positive, and they considered it an effective tool for improving their procedural skills. The satisfaction of nursing faculty with DOPS suggests that it is a reliable tool for evaluating procedural skills. The authors recommended that nursing schools incorporate DOPS into their curriculum to enhance the quality of nursing education [45]. Nursing students who received DOPS had significantly improved clinical skills, increased confidence in performing clinical procedures, and increased satisfaction with their clinical placement experience compared with the control group. DOPS should therefore be considered as a standard part of nursing education programs to improve clinical skills, confidence, and satisfaction among nursing students. This could lead to improved patient care outcomes and increased retention and recruitment of nursing students [46]. Further findings suggest that formative evaluation using the DOPS method can effectively enhance the practical skills of nursing students in the ICU. Educators and instructors can use the DOPS method to evaluate students' skills and provide feedback to improve those skills during the learning process [47].

Pharmacy Education

A study was conducted to evaluate a DOPS rubric as a valid and reliable tool for assessing procedural skills in pharmacy students, to establish the content and face validity of the rubric, and to evaluate its inter-rater reliability. The rubric was piloted on a group of fourth-year pharmacy students in ambulatory care rotations, and inter-rater reliability was assessed using a kappa statistic. The results indicated that the rubric was a valid and reliable tool for assessing procedural skills in pharmacy students and may be useful in identifying areas where students require additional training and in evaluating the effectiveness of training programs [48].
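To make the kappa statistic concrete, the following is a minimal Python sketch of Cohen's kappa for two raters scoring the same performances on an ordered rubric scale, with optional linear or quadratic weighting. The function name `cohen_kappa`, its `weights` argument, and the example ratings are hypothetical; the source does not state which kappa variant the study used.

```python
def cohen_kappa(rater_a, rater_b, categories, weights=None):
    """Cohen's kappa for two raters; weights may be None, 'linear' or 'quadratic'.

    categories: the ordered rubric levels, lowest to highest.
    At least two distinct levels must appear in the ratings.
    """
    index = {c: i for i, c in enumerate(categories)}
    m, n = len(categories), len(rater_a)

    # Observed contingency table of rating pairs
    observed = [[0] * m for _ in range(m)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]

    def disagreement(i, j):
        if weights is None:
            return 0.0 if i == j else 1.0
        distance = abs(i - j) / (m - 1)
        return distance if weights == "linear" else distance ** 2

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(m):
        for j in range(m):
            w = disagreement(i, j)
            weighted_observed += w * observed[i][j]
            # Expected count if the two raters scored independently
            weighted_expected += w * row_totals[i] * col_totals[j] / n
    return 1.0 - weighted_observed / weighted_expected


# Illustrative rubric levels 1-4 given by two preceptors to 8 students
preceptor_1 = [1, 2, 2, 3, 3, 4, 4, 4]
preceptor_2 = [1, 2, 3, 3, 3, 4, 3, 4]
levels = [1, 2, 3, 4]
print("unweighted:", round(cohen_kappa(preceptor_1, preceptor_2, levels), 3))
print("quadratic: ", round(cohen_kappa(preceptor_1, preceptor_2, levels, "quadratic"), 3))
```

Weighted variants give partial credit when raters differ by only one rubric level, which is why they are often preferred for ordinal DOPS scales.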

Allied Health Sciences Education

Microbiology

DOPS was evaluated as an assessment tool among postgraduate students in medical microbiology in terms of its reliability, validity, feasibility, and acceptability. DOPS was found to be a valid and reliable tool for assessing procedural skills in medical microbiology, and the mean scores obtained using DOPS were significantly correlated with the scores obtained using the checklist [49].

Radiology

One study highlights the importance of the DOPS assessment method in radiology education but notes that its implementation requires more time and resources. Strategies should be developed to integrate this method into the radiology education curriculum [50].

Midwifery

In one study, midwifery students were more satisfied with DOPS than with the current methods (n=100; 76.7 ± 12.9 vs 62.6 ± 14.7, respectively). The study concluded that DOPS is a more effective and satisfactory method for evaluating midwifery students' skills than the current methods (P<0.001) and recommended that it be used more widely in midwifery education [51].

Summary

In summary, DOPS has been recognized as a reliable assessment tool by various stakeholders across HPE academic programmes and is well accepted for both formative and summative evaluations. Nevertheless, very limited evidence has been reported on the use and implementation of DOPS assessment in pharmacy and other allied health sciences education.

Funding

This work did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Conflict of Interest Statement

The author declares that there is no conflict of interest.

Ethical Approval and Author contribution

The author declares that there is no ethical issue.

Acknowledgement

The author, Dr. Vasudeva Rao Avupati, is thankful to the IMU senior and academic management for providing the facilities to complete this review study.

References

- B W Williams, P D Byrne, D Welindt, M V Williams (2016) Miller’s pyramid and core competency assessment: a study in relationship construct validity. Journal of Continuing Education in the Health Professions 36(4): 295-299.

- V J Marsick, K Watkins (2015) Approaches to studying learning in the workplace, in Learning in the workplace. Routledge 171-198.

- K H Koh (2017) Authentic assessment, in Oxford research encyclopedia of education.

- ME McBride, MD Adler, WC McGaghie (2019) Workplace-based assessment, in Assessment in health professions education. Routledge 160-172.

- D J Castanelli, JMW Moonen van Loon, B Jolly, J M Weller (2019) The reliability of a portfolio of workplace-based assessments in anesthesia training. Can J Anesth/J Can Anesth 66(2): 193-200.

- A Amini, F Shirzad, M A Mohseni, A Sadeghpour, A Elmi (2016) Designing Direct Observation of Procedural Skills (DOPS) Test for Selective Skills of Orthopedic Residents and Evaluating Its Effects from Their Points of View. Res dev med edu 4(2): 147-152.

- C Kamat, M Todakar, M Patil, A Teli (2022) Changing trends in assessment: Effectiveness of Direct observation of procedural skills (DOPS) as an assessment tool in anesthesiology postgraduate students. J Anaesthesiol Clin Pharmacol 38(2): 275-280.

- ME Khanghahi, FEF Azar (2018) Direct observation of procedural skills (DOPS) evaluation method: Systematic review of evidence. Medical journal of the Islamic Republic of Iran 32: 45.

- A C Loerwald, Felicitas Maria Lahner, Zineb M Nouns, Christoph Berendonk, John Norcini, et al. (2018) The educational impact of Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) and its association with implementation: A systematic review and meta-analysis. PloS one 13(6): e0198009.

- A C Lörwald, F M Lahner, R Greif, C Berendonk, J Norcini, et al. (2018) Factors influencing the educational impact of Mini-CEX and DOPS: a qualitative synthesis. Medical teacher 40(4): 414-420.

- D Ouellet (2010) Benefit-risk assessment: the use of clinical utility index. Expert opinion on drug safety 9(2): 289-300.

- P Songkanha, P Chanprapaph, T Lertbunnaphong, O Supapueng, U Naimsiri (2023) The efficacy of postpartum tubal sterilization training program with minilaparotomy approach in Ob/Gyn residents. Heliyon 9(1): e12722.

- K Siau, James Crossley, Paul Dunckley, Gavin Johnson, Mark Feeney, et al. (2020) Colonoscopy Direct Observation of Procedural Skills Assessment Tool for Evaluating Competency Development During Training. Am J Gastroenterol 115(2): 234-243.

- K Siau, James Crossley, Paul Dunckley, Gavin Johnson, Mark Feeney, et al. (2020) Direct observation of procedural skills (DOPS) assessment in diagnostic gastroscopy: nationwide evidence of validity and competency development during training. Surgical endoscopy 34(1): 105-114.

- P Pantelidis, Michail Sideris, Georgios Tsoulfas, Efstratia Maria Georgopoulou, Ismini Tsagkaraki, et al. (2017) Is In-Vivo laparoscopic simulation learning a step forward in the Undergraduate Surgical Education?. Annals of Medicine and Surgery 16: 52-56.

- A Chuan, S Thillainathan, P L Graham, B Jolly, D M Wong, et al. (2016) Reliability of the Direct Observation of Procedural Skills assessment tool for ultrasound-guided regional anaesthesia. Anaesth Intensive Care 44(2): 201-209.

- CM Walsh (2016) In-training gastrointestinal endoscopy competency assessment tools: Types of tools, validation and impact. Best Practice & Research Clinical Gastroenterology 30(3): 357-374.

- J R Barton, S Corbett, CP van der Vleuten (2012) The validity and reliability of a Direct Observation of Procedural Skills assessment tool: assessing colonoscopic skills of senior endoscopists. Gastrointestinal Endoscopy 75(3): 591-597.

- A Stritzke, Prashanth Murthy, Elsa Fiedrich, Michael Andrew Assaad, Alexandra Howlett, et al. (2023) Advanced neonatal procedural skills: a simulation-based workshop: impact and skill decay. BMC Med Educ 23(1): 26.

- R Alborzi, J Koohpayehzadeh, M Rouzbahani (2021) Validity and reliability of the persian version of direct observation of procedural skills tool in audiology. The Scientific Journal of Rehabilitation Medicine 10(2): 346-357.

- K Siau, Rachel Levi, Marietta Iacucci, Lucy Howarth, Mark Feeney, et al. (2019) Paediatric Colonoscopy Direct Observation of Procedural Skills: Evidence of Validity and Competency Development. Journal of Pediatric Gastroenterology & Nutrition 69(1): 18-23.

- K M Moore (2018) Adaptation of Direct Observation of Procedural Skills (DOPS) for assessments in podiatry. FoHPE 19(1): 65.

- A Finall (2012) Trainers’ perceptions of the direct observation of practical skills assessment in histopathology training: a qualitative pilot study. J Clin Pathol 65(6): 538-540.

- K Siau, James Crossley, Paul Dunckley, Gavin Johnson, Adam Haycock, et al. (2019) Training and Assessment in Flexible Sigmoidoscopy: using a Novel Direct Observation of Procedural Skills (DOPS) Assessment Tool. JGLD 28(1): 33-40.

- K H Tsui, C Y Liu, J M Lui, S T Lee, R P Tan, et al. (2013) Direct observation of procedural skills to improve validity of students’ measurement of prostate volume in predicting treatment outcomes. Urological Science 24(3): 84-88.

- H S Heinzow, Hendrik Friederichs, Philipp Lenz, Andre Schmedt, Jan C Becker, et al. (2013) Teaching ultrasound in a curricular course according to certified EFSUMB standards during undergraduate medical education: a prospective study. BMC Med Educ 13(1): 84.

- L Jafri, Imran Siddiqui, Aysha Habib Khan, Muhammed Tariq, Muhammad Umer Naeem Effendi, et al. (2020) Fostering teaching-learning through workplace-based assessment in postgraduate chemical pathology residency program using virtual learning environment. BMC Med Educ 20(1): 383.

- L Ali, S Ali, N Orakzai, N Ali (2019) Effectiveness of Direct Observation of Procedural Skills (DOPS) in Postgraduate Training in Urology at Institute of Kidney Diseases, Peshawar. J Coll Physicians Surg Pak 29(6): 516-519.

- T Khaliq (2014) Effectiveness of direct observation of procedural skills (DOPS) as an assessment tool in surgery. Pakistan Armed Forces Medical Journal 64(4): 626-629.

- C Haines, R Dennick, J A P da Silva (2013) Developing a professional approach to work-based assessments in rheumatology. Best Practice & Research Clinical Rheumatology 27(2): 123-136.

- M RA McLeod, G J Mires, J S Ker (2011) The use of the Direct Observation of Procedural Skills (DOPS) assessment tool in the clinical setting-the perceptions of students. International Journal of Clinical Skills 5: 2.

- Saeed Sadiq Hamid, Tabassum Zehra, Muhammad Tariq, Azam Saeed Afzal, Hashir Majid, et al. (2022) Implementing work place-based assessment: the modified direct observation of procedural skills (DOPS) across medical specialties. An experience from a developing country. J Pak Med Assoc 72(4): 620-624.

- P Inamdar, P K Hota, M Undi (2022) Feasibility and Effectiveness of Direct Observation of Procedure Skills (DOPS) in General Surgery discipline: a Pilot Study. Indian J Surg 84(1): 109-114.

- C O Kara, E Mengi, F Tümkaya, B Topuz, F N Ardıç (2018) Direct observation of procedural skills in otorhinolaryngology training. Turkish archives of otorhinolaryngology 56(1): 7-14.

- J M Weimer, Maximilian Rink, Lukas Müller, Klaus Dirks, Carlotta Ille, et al. (2023) Development and Integration of DOPS as Formative Tests in Head and Neck Ultrasound Education: Proof of Concept Study for Exploration of Perceptions. Diagnostics 13(4): 661.

- J Lagoo, S Joshi (2021) Introduction of direct observation of procedural skills (DOPS) as a formative assessment tool during postgraduate training in anaesthesiology: Exploration of perceptions. Indian J Anaesth 65(3): 202-209.

- S Sethi, D K Badyal (2019) Clinical procedural skills assessment during internship in ophthalmology. Journal of Advances in Medical Education & Professionalism 7(2): 56-61.

- A C Lörwald, Felicitas Maria Lahner, Bettina Mooser, Martin Perrig, Matthias K Widmer, et al. (2019) Influences on the implementation of Mini-CEX and DOPS for postgraduate medical trainees’ learning: A grounded theory study. Medical Teacher 41(4): 448-456.

- S Rathod, R Kolte, N Gonde (2020) Assessment of suitability of direct observation of procedural skills among postgraduate students and faculty in periodontology and implantology. SRM J Res Dent Sci 11(4): 185.

- G Alfakhry, Khattab Mustafa, M Abdulhadi Alagha, Obada Zayegh, Hussam Milly, et al. (2022) Peer-assessment ability of trainees in clinical restorative dentistry: can it be fostered?. BDJ Open 8(1): 22.

- C S Liu, YM Wang, H N Lin (2020) An 8-year retrospective survey of assessment in postgraduate dental training in complicated tooth extraction competency. Journal of Dental Sciences (3): 891-898.

- G Singh, R Kaur, A Mahajan, A Thomas, T Singz (2017) Piloting direct observation of procedural skills in dental education in India. Int J App Basic Med Res 7(4): 239-242.

- M Azeem, J Iqbal, A U Haq, B MCPS (2019) The Effect of Applying DOPS on BDS Students’ Orthodontic Skills: A Quasi-Experimental Study. JPDA 28(1): 19.

- M Imanipour, M Jalili (2015) Evaluation of the nursing students’ skills by DOPS.

- M Jalili, M Imanipour, N D Nayeri, A Mirzazadeh (2015) Evaluation of the nursing students’ skills by DOPS. Journal of Medical Education 14(1):

- H Hengameh, R Afsaneh, K Morteza, M Hosein, S M Marjan, et al. (2015) The effect of applying direct observation of procedural skills (DOPS) on nursing students’ clinical skills: a randomized clinical trial. Global journal of health science 7(7): 17-21.

- N Roghieh, H Fateme, S Hamid, H Hamid (2013) The effect of formative evaluation using ‘direct observation of procedural skills’(DOPS) method on the extent of learning practical skills among nursing students in the ICU. Iranian journal of nursing and midwifery research 18(4): 290-293.

- S J Linedecker, J Barner, J Ridings Myhra, A Garza, D Lopez, et al. (2017) Development of a direct observation of procedural skills rubric for fourth-year pharmacy students in ambulatory care rotations. American Journal of Health-System Pharmacy 74(5_Supplement_1): S17-S23.

- S Sande (2017) Direct observation of procedural skills as an assessment tool for postgraduates in medical microbiology. International Journal of Health Sciences & Research 7(2): 56-62.

- V Bari (2010) Direct observation of procedural skills in radiology. American Journal of Roentgenology 195(1): W14-W18.

- B L Hoseini, S R Mazloum, F Jafarnejad, M Foroughipour (2013) Comparison of midwifery students’ satisfaction with direct observation of procedural skills and current methods in evaluation of procedural skills in Mashhad Nursing and Midwifery School. Iranian Journal of Nursing and Midwifery Research 18(2): 94-100.