Review Article

Creative Commons, CC-BY


Implementing Artificial Intelligence in Health Care: Data and Algorithm Challenges and Policy Considerations

*Corresponding author: Ketan Paranjape, MS, MBA, PhD Student, Amsterdam UMC, Vrije Universiteit Amsterdam, The Netherlands.

Received: August 08, 2020; Published: January 08, 2021

DOI: 10.34297/AJBSR.2021.11.001645

Abstract

Artificial Intelligence (AI) is already driving fundamental changes in health care operations and patient care, and shows promise to significantly advance the quadruple aim: enhancing the patient experience, improving population health, reducing costs and improving the provider experience. However, along with these impressive advances and the realistic potential to transform health care in the near term come thorny questions about liability and accountability, algorithmic bias and representative data, and the ability to accurately interpret and explain data.

We address the challenges of implementing AI in routine health care practice by looking at the role of data and algorithms and the implications for medical malpractice. We then summarize ongoing efforts to create AI policy and regulation globally to address these challenges in order to enable the mainstreaming of AI in health care.

Introduction

Artificial intelligence (AI) in health care [1] is the use of a collection of computing technologies, designed to augment human intelligence, that perform perception, learning, reasoning, and decision-making tasks.

The goals of AI and its applications should be to advance the quadruple aim in health care [2,3]: enhancing the patient experience, improving population health, reducing costs and improving the provider experience. AI in health care [4] can be used to augment the diagnostic process, automate image interpretation, improve clinical trial participation, reduce medication errors caused by wrong dosages, detect insurance fraud, and improve the physician’s workflow by supporting point-of-care learning, clinical documentation and quality-measurement reporting [5].

As we digitize health care [6], we must ensure that AI augments and enhances human capabilities and interactions, with the goal of building trust and avoiding failures. For example, misclassifying a malignant tumor can be costly and dangerous, and a wrong diagnosis can have far-reaching consequences for a patient, such as being recommended further investigations and interventions, or even being sent to hospice.

The responsibility for establishing guidelines and policies lies with government and regulatory bodies, ideally with input from key stakeholders including physicians, administrators, public and private institutions, and patients. Along with developing new and retooled laws and regulations, these bodies should design policies that encourage helpful innovation, generate and transfer expertise, and foster broad corporate and civic responsibility for addressing the critical issues raised by AI technologies.

Even as AI systems become mainstream in health care, they remain susceptible to errors and failures. In one study, an AI system was trained to learn which patients with pneumonia had a higher risk of death, and it inadvertently classified patients with asthma as being at lower risk. The system could not capture the fact that patients with pneumonia and a history of asthma were admitted directly to the hospital and received treatment that significantly reduced their risk of dying. Because that treatment was invisible to it, the model interpreted the low observed mortality as meaning that patients with pneumonia and asthma have a lower risk of death [7].
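The mechanism behind this error can be reproduced with a small simulation. The sketch below is illustrative only and is not the model or data from [7]: the cohort, the 20% asthma prevalence and every risk value are hypothetical assumptions. Asthma is given a higher untreated risk of death, but every asthma patient receives aggressive care that the data never record, so an estimate learned from outcomes alone makes asthma look protective.

```python
import random


def simulate_cohort(n, seed=0):
    """Simulate a hypothetical pneumonia cohort as (asthma, died) pairs.

    Every probability here is an illustrative assumption, not a value from [7].
    Asthma raises the untreated risk of death, but all asthma patients are
    admitted directly and treated aggressively, which cuts that risk sharply.
    """
    rng = random.Random(seed)
    cohort = []
    for _ in range(n):
        asthma = rng.random() < 0.2                  # assumed 20% prevalence
        untreated_risk = 0.15 if asthma else 0.10    # asthma is truly riskier
        aggressive_care = asthma                     # never recorded in the data
        risk = untreated_risk * (0.3 if aggressive_care else 1.0)
        died = rng.random() < risk
        cohort.append((asthma, died))
    return cohort


def learned_risk_by_asthma(cohort):
    """Estimate mortality per asthma status from outcomes alone.

    This mimics what any model sees when treatment is missing from its inputs.
    """
    counts = {True: [0, 0], False: [0, 0]}  # asthma -> [deaths, patients]
    for asthma, died in cohort:
        counts[asthma][0] += died
        counts[asthma][1] += 1
    return {asthma: deaths / total for asthma, (deaths, total) in counts.items()}


risk = learned_risk_by_asthma(simulate_cohort(100_000))
print(f"learned risk of death, asthma:    {risk[True]:.3f}")
print(f"learned risk of death, no asthma: {risk[False]:.3f}")
```

With these assumed numbers, the learned risk for patients with asthma should land near the treated value of 0.045, well below the roughly 0.10 for patients without asthma, even though asthma was set up as the riskier condition. The estimate is faithful to the data it sees; the problem is that the data omit the treatment that explains the pattern.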

Another potential obstacle to AI going mainstream is the possibility of deliberately “biasing” the data [8] to cause misdiagnosis. In an adversarial attack, the inputs are engineered so that a model misclassifies them. Areas such as dermatology, ophthalmology and radiology are susceptible because there are enormous incentives from which providers could benefit [9]. For example, under CMS guidelines, insurance companies must pay for vitrectomy surgery when diabetic retinopathy is confirmed. To reduce the number of procedures without any change to CMS policy, could an insurance company add adversarial noise to positive images so that they are read as negative?
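To make the idea of engineered inputs concrete, the sketch below applies the fast gradient sign method (FGSM), a standard adversarial technique, to a toy logistic-regression classifier. It is an assumption-laden illustration, not the attack studied in [9]: the four-pixel "image", the weights, the bias and the perturbation size `eps` are all hypothetical, and real attacks target deep networks with far more inputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability of disease that a toy logistic model assigns to image x."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(weights, bias, x, label, eps):
    """Fast gradient sign method against a logistic model.

    With cross-entropy loss L, dL/dx_i = (p - label) * w_i. Moving each pixel
    by eps in the direction of sign(dL/dx_i) increases the loss on the true
    label while changing no pixel by more than eps.
    """
    p = predict(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    # Clip so the perturbed pixels stay in the valid [0, 1] range.
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]


weights = [2.0, -1.5, 3.0, 0.5]   # hypothetical trained weights
bias = -2.0
image = [0.7, 0.2, 0.6, 0.4]      # hypothetical 4-pixel image showing disease

adversarial = fgsm(weights, bias, image, label=1, eps=0.2)
print(f"original:    p(disease) = {predict(weights, bias, image):.3f}")
print(f"adversarial: p(disease) = {predict(weights, bias, adversarial):.3f}")
```

With these numbers the predicted probability of disease drops from about 0.75 to about 0.43, flipping the classification, although no pixel changes by more than 0.2. For a linear model the attack direction is simply the opposite of each weight's sign, which is why a small, uniform nudge against every weight is enough.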

Ultimately, the impact on end users depends on how they perceive, interpret and tolerate these shortcomings. As AI becomes more embedded in our daily lives, mistakes could have serious, even deadly, consequences. Whether the mistake is an accident caused by a self-driving car [10] or the misdiagnosis of a health condition, AI systems are, and will increasingly be, under heavy scrutiny.

In this paper, we address some challenges of implementing AI in routine health care practice by looking at the role of data and algorithms and the implications for medical malpractice. We then describe ongoing efforts to create AI policy and regulation that address these challenges in order to enable the mass adoption of AI in health care.

Challenges for Artificial Intelligence in Health Care

AI is becoming an increasingly advanced, sophisticated, and meaningful field, and its uses and implications are far-reaching. For example, researchers at Stanford recently developed an algorithm that can detect pneumonia from chest x-rays at a level exceeding the ability of practicing radiologists [11]. Scientists at Google also developed a deep learning approach that predicted inpatient mortality, unexpected readmissions, and long length of stay more accurately than existing models by mining data from electronic health records [12].

At the heart of AI’s value proposition is the ability to process vast amounts of data and then act on that data through algorithms using techniques such as machine learning and cognitive computing [13]. Harnessing the power of machines to process data much faster than any human being can, AI technologies have the potential to identify health care diagnoses, treatment plans and trends quickly by sifting through information and analyzing patient histories. These findings could support, inform, and enable physician decision-making.

Two factors present challenges to implementing AI in health care: the role of algorithms and data, and the potential ramifications for medical malpractice.

Role of Algorithms and Data

Computer algorithms are at the core of AI and are part of applications in education, financial services, health care, navigation, and manufacturing. In health care, these algorithms are being used to make decisions such as prioritizing activities for staff members or triggering interventions for admitted patients, as reported at Johns Hopkins Hospital [14]. In these situations, how can one trust the algorithm to do the right thing, every time? Take the case of ‘Deep Patient’, in which researchers at Mount Sinai Hospital applied deep learning to 700,000 patient records. Without expert guidance, the tool was able to identify patterns and predict the onset of diseases such as liver cancer. The tool also predicted the onset of schizophrenia, a condition that is very difficult to predict even for the most experienced psychiatrists, but offered no clue as to how it did so. This inability to see how an AI system arrives at its decision is known as the “black box” problem [15]. So how can we trust such a system?

Even more concerning, what are the consequences for the patient? If a physician tells a patient that he or she will develop schizophrenia and, over time, the patient does not, what impact does this have on the patient? This raises another ethical dilemma: when physicians realize they have made a wrong diagnosis because of AI, are they obliged to tell their patients?

Decisions made by predictive algorithms can be obscure because of many factors, including technical (the algorithm may not lend itself to easy explanation), economic (the cost of providing transparency may be excessive, including the compromise of trade secrets), and social (revealing input may violate privacy expectations).

When algorithms are applied to individual treatment decisions, the physician must interpret a prediction model that was built by running the algorithm over a large population. The physician must then judge whether the prediction score is reliable for the patient at hand before proposing a diagnosis.
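One common way to judge whether prediction scores are reliable at the population level is a calibration check, which compares the risk a model predicts with how often the outcome actually occurs. Calibration is not discussed in the sources cited here; the sketch below is a minimal illustration with hypothetical validation data and an assumed choice of five equal-width bins.

```python
def calibration_table(predictions, outcomes, n_bins=5):
    """Compare mean predicted risk with the observed event rate in each bin.

    Returns (bin_low, bin_high, mean_predicted, observed_rate, count) for every
    non-empty bin. In a well-calibrated model the two rates are close.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(predictions, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # a score of 1.0 joins the top bin
        bins[idx].append((p, y))
    table = []
    for i, members in enumerate(bins):
        if not members:
            continue
        mean_pred = sum(p for p, _ in members) / len(members)
        observed = sum(y for _, y in members) / len(members)
        table.append((i / n_bins, (i + 1) / n_bins, mean_pred, observed, len(members)))
    return table


# Hypothetical validation data: predicted risks and observed outcomes (1 = event).
predictions = [0.1, 0.1, 0.1, 0.1, 0.9, 0.9, 0.9, 0.9]
outcomes = [0, 0, 0, 1, 1, 1, 1, 0]
for low, high, mean_pred, observed, n in calibration_table(predictions, outcomes):
    print(f"[{low:.1f}, {high:.1f}): predicted {mean_pred:.2f}, observed {observed:.2f} (n={n})")
```

Here patients scored at 0.1 had an observed event rate of 0.25 and those scored at 0.9 had 0.75, suggesting that this hypothetical model is overconfident at both extremes. A check like this describes the population the model was validated on, so the physician still has to judge whether it applies to the individual patient.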

Along with algorithms, AI relies heavily on patient data. To train a machine to identify specific conditions, hundreds of thousands of data elements are needed. For example, Google is using 46 billion data points to predict the medical outcomes of hospital patients [16]. The challenge here is that this need for data runs up against current models of patient privacy, consent, and control. Implementing AI in health care could violate HIPAA (Health Insurance Portability and Accountability Act) policies [17].

Although HIPAA does not specifically address technologies such as AI, it was put in place to protect individuals’ medical records and other personal health information. Consumers and patients are also rapidly pushing back against an industry “gold rush” mentality that tosses aside privacy and consent models [18]. Companies such as Luna DNA, along with other blockchain-based approaches, are enabling consumers and patients to share their data while retaining control over it [19].

Patients increasingly understand the importance of meaningful notice, consent, and control over their data, both HIPAA-covered data and data outside HIPAA jurisdiction.

Implications for Medical Malpractice

As algorithms ingest large quantities of high-quality data from across the health care ecosystem, the use of AI is expected, over time, to result in fewer misdiagnoses and errors [20]. An AI system will be able to predict a diagnosis from complex relationships between the patient and expected treatment results without explicitly identifying or understanding those connections. We are now entering an era in which the burden of medical decision-making shifts from the physician to an algorithm. What happens when a physician, relying on an algorithm, pursues an improper treatment that results in an error?

In the US, medical malpractice is a professional tort system that holds physicians liable when the care they provide deviates so far from accepted standards as to constitute negligence or recklessness [21]. The system has evolved around the conception of the physician as the trusted expert, and presumes for the most part that the diagnosing or treating physician is entirely responsible for his or her decisions and is thus accountable if the care provided is negligent or reckless. In England and Wales, medical liability falls under the law of tort, specifically negligence [22]. In cases of clinical negligence, it is general practice that National Health Service (NHS) Trusts and Health Authorities, rather than individual clinicians, are liable and are the bodies that are sued. The NHS has provided guidance that it will accept full financial liability where negligent harm has occurred, and will not seek to recover costs from the health care professional involved [23].

Who is liable for erroneous care based on a decision made by a machine is a hard problem to solve. Future malpractice guidelines should address it by considering not only health care professionals but also the software companies that created the algorithm.

In 2018, IDx-DR became the first FDA-authorized AI system for the autonomous detection of diabetic retinopathy using deep learning algorithms [24]. IDx, the company that developed the system, carries medical malpractice and liability insurance [25]. The company is responsible for the autonomous diagnostic AI performing within specification for on-label use of the device; in an off-label situation, liability for an erroneous treatment decision would typically rest with the physician using it off-label.

As AI evolves rapidly, its adoption and the diversity of its applications in medical practice are outpacing the establishment of the standards and guidelines critically needed to protect the health care community. Key stakeholders from both the public and private sectors need to collaborate and recommend policy guidelines that enable the safe use of AI.

Ongoing Efforts in Creating AI Policy

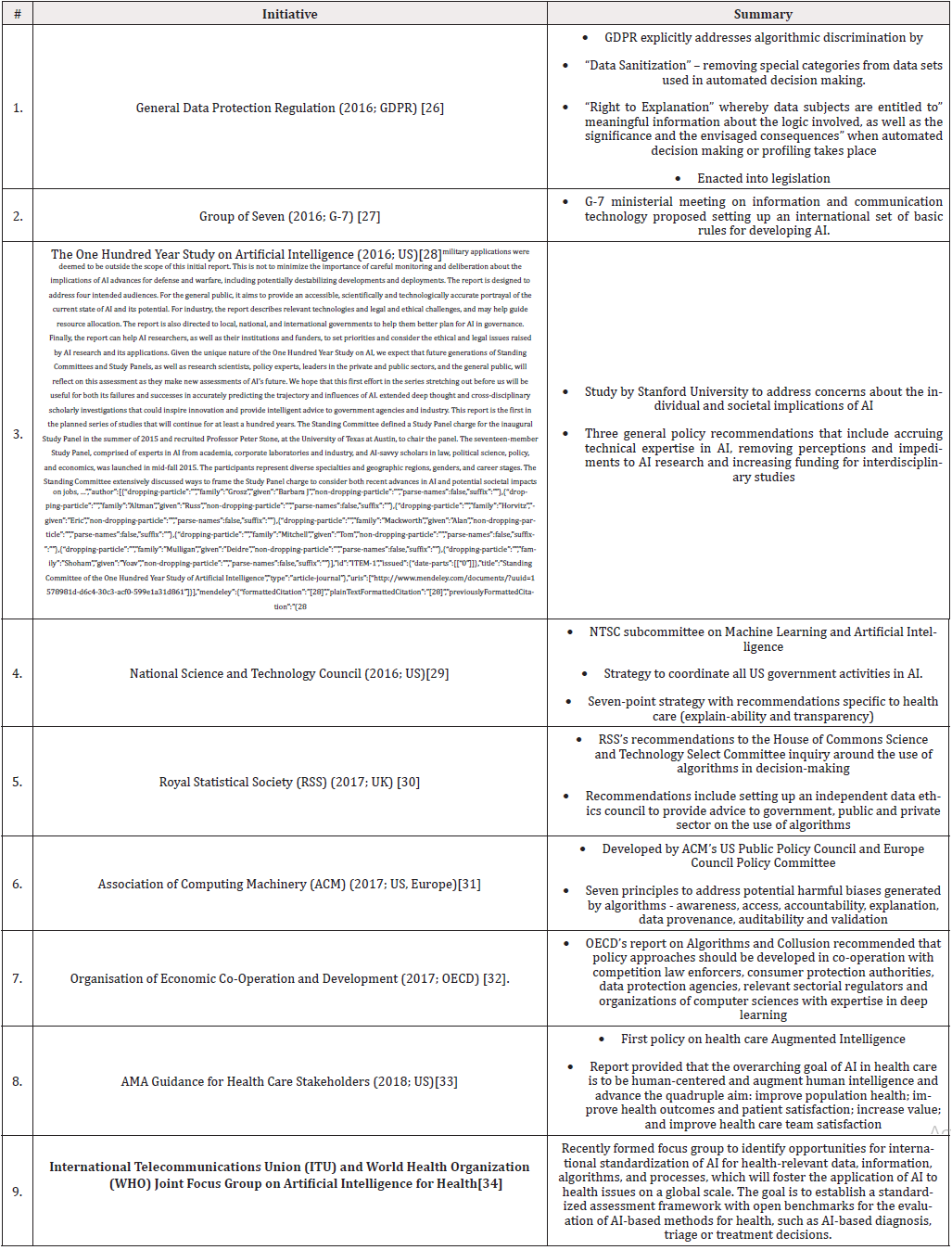

A systematic search revealed multiple initiatives underway in governments, academia, international consortiums, organizations and technology companies where policy guidelines and recommendations are being identified to address both the data and algorithm components of AI. Subject matter experts in these groups include members from science, engineering, economics, ethics, regulation and policy. While the majority of these recommendations do not directly address the health care industry, many could be applicable to the use of AI in health care.

In the following table we briefly summarize, in chronological order starting in 2016, activities that have gained significant momentum based on their impact, and indicate whether each consists of guidelines and recommendations to drive policy or has been enacted into legislation. We also indicate whether these recommendations apply to health care (Table 1).

General Data Protection Regulation (GDPR)

The GDPR [26], adopted by the European Parliament and Council in April 2016, is the first piece of EU legislation that explicitly addresses algorithmic discrimination. Recital 71 states a requirement to “implement technical and organizational measures that prevent, inter alia, discriminatory effects on natural persons based on racial or ethnic origin, political opinion, religion or beliefs, trade union membership, genetic or health status or sexual orientation, or that result in measures having such an effect.” These characteristics are referred to as “special categories”, and have their basis in non-discrimination legislation, such as Article 14 of the European Convention on Human Rights (EC, 2010).

Moving beyond recitals [35], the GDPR addresses algorithmic discrimination through two key principles. The first, data sanitization, is the removal of special categories from datasets used in automated decision making. This principle is introduced by Article 9 (Processing of special categories of personal data), which establishes a prima facie prohibition against “the processing of data revealing racial or ethnic origin” and other “special categories”. It is strengthened by Article 22 (Automated individual decision-making, including profiling), which specifically prohibits “a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her” that is “based on the special categories of personal data referred to in Article 9” (art. 22(1), 22(4)).
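In its simplest form, data sanitization amounts to dropping special-category fields before records reach an automated decision-making system. The sketch below is hypothetical: the field names are invented for illustration and loosely follow the categories listed in Recital 71, and it ignores the exceptions that Article 9(2) provides, including for the provision of health care.

```python
# Hypothetical field names loosely following the special categories listed in
# Recital 71; the GDPR defines categories of data, not a data schema.
SPECIAL_CATEGORIES = frozenset({
    "racial_or_ethnic_origin",
    "political_opinion",
    "religion_or_beliefs",
    "trade_union_membership",
    "genetic_status",
    "health_status",
    "sexual_orientation",
})


def sanitize(record):
    """Return a copy of record with every special-category field removed."""
    return {field: value for field, value in record.items()
            if field not in SPECIAL_CATEGORIES}


applicant = {
    "age": 54,
    "postcode": "1081",
    "racial_or_ethnic_origin": "withheld",
    "trade_union_membership": True,
}
print(sanitize(applicant))
```

Removing named fields does not remove information that other fields, such as a postcode, may still correlate with, so sanitization on its own does not guarantee that a decision is free of discriminatory effects.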

The second principle, algorithmic transparency, introduces the “right to explanation” [30], whereby data subjects are entitled to “meaningful information about the logic involved, as well as the significance and the envisaged consequences” when automated decision making or profiling takes place (art. 13(2)(f); art. 14(2)(g)). In Article 12 (Transparent information, communications and modalities for the exercise of the rights of the data subject), the GDPR further specifies that such information must be provided “in a concise, transparent, intelligible and easily accessible form, using clear and plain language.”
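The GDPR does not prescribe how “meaningful information about the logic involved” should be produced. For a linear scoring model, one simple option is to report how much each input moved the score relative to a reference patient. The sketch below assumes a hypothetical readmission-risk model; the feature names, weights and reference values are invented for illustration, and the decomposition is faithful only for linear models.

```python
def explain_linear_score(weights, reference, patient):
    """Rank each feature's contribution to a linear model's score.

    A contribution is weight * (patient value - reference value), so the
    contributions sum to score(patient) - score(reference). This
    decomposition is only faithful for linear models.
    """
    contributions = {name: weights[name] * (patient[name] - reference[name])
                     for name in weights}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical readmission-risk model, reference patient and patient.
weights = {"age": 0.03, "prior_admissions": 0.8, "hba1c": 0.4}
reference = {"age": 50, "prior_admissions": 1, "hba1c": 6.0}
patient = {"age": 70, "prior_admissions": 3, "hba1c": 6.5}

for name, contribution in explain_linear_score(weights, reference, patient):
    direction = "raises" if contribution > 0 else "lowers"
    print(f"{name} {direction} the risk score by {abs(contribution):.2f}")
```

For this hypothetical patient, prior admissions rank first (+1.60), then age (+0.60), then HbA1c (+0.20). Deep learning models need other explanation techniques, which is part of why transparency is harder to achieve for them.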

G-7

At the April 2016 Group of Seven (G-7) ministerial meeting on information and communication technology in Shikoku, Japan’s communication minister Sanae Takaichi proposed establishing an international set of basic rules for developing AI [27]. Under the proposed guidelines, AI programs would be designed so that they do not endanger human lives or physical safety, can be stopped in an emergency and have their actions corrected in real time, protect themselves against cyber-attacks so that people with malicious intentions cannot take control, and are transparent so that AI’s actions can be examined [36].

The One Hundred Year Study on Artificial Intelligence

In the fall of 2014, a long-term investigation of the field of AI and its influence on people, their communities, and society, called “The One Hundred Year Study on Artificial Intelligence”, was commissioned [28]. The study considered the science, engineering, and deployment of AI-enabled computing systems. The study panel reviewed AI’s progress in recent years, envisioned the potential advances that lay ahead, and described the technical and societal challenges and opportunities the field raised in areas of ethics, economics, and the design of systems compatible with human cognition.

To help address the concerns about the individual and societal implications of rapidly evolving AI technologies, the study panel offered three general policy recommendations [28]. The first is to define a path toward accruing technical expertise in AI at all levels of government. Effective governance requires more experts who understand and can analyze the interactions between AI technologies, programmatic objectives, and overall societal values. The second focuses on removing the perceived and actual impediments to research on the fairness, security, privacy, and social impacts of AI systems. The third recommends increasing public and private funding for interdisciplinary studies of the societal impacts of AI.

National Science and Technology Council

To coordinate all federal activities in AI, in 2016 the United States government formed a National Science and Technology Council (NSTC) subcommittee on Machine Learning and Artificial Intelligence [37]. The NSTC then directed a subcommittee on Networking and Information Technology Research and Development (NITRD) to create a National Artificial Intelligence Research and Development Strategic Plan [38].

The plan laid out a seven-point strategy that included:

- Making long-term investments in AI research

- Developing effective methods for human-AI collaboration

- Understanding and addressing the ethical, legal, and societal implications of AI

- Ensuring the safety and security of AI systems

- Developing shared public datasets and environments for AI training and testing

- Measuring and evaluating AI technologies through standards and benchmarks

- Better understanding the national AI R&D workforce needs [29].

For health care, the recommendation was to improve the explainability and transparency of AI algorithms: many are based on deep learning, are opaque to users, and have few existing mechanisms for explaining their results. From a liability perspective, physicians need to know why a decision was suggested and need explanations to justify a diagnosis or a course of treatment.

Royal Statistical Society (RSS)

In April 2017, the Royal Statistical Society (UK) [30] submitted its recommendations to the House of Commons Science and Technology Select Committee inquiry into the use of algorithms in decision-making. The inquiry focused on issues of governance, transparency, and fairness relating to algorithms.

The recommendations included establishing an independent Data Ethics Council to provide impartial advice to the government, the private sector and the public on topics related to the use of algorithms; allowing existing laws (e.g., anti-discrimination law) to manage issues arising from the unfair use or results of algorithms; developing professional standards for data science, including strong ethical training in data science courses; and using existing industry regulators to monitor the outcomes of algorithms for bias.
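Monitoring the outcomes of algorithms for bias can start with something as simple as comparing how often an algorithm grants a favorable decision to each group. The sketch below computes per-group approval rates and their ratio; the groups, the decision log and the 0.8 alert threshold are hypothetical assumptions, with the threshold echoing the “four-fifths” heuristic used in US employment selection guidelines.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += approved
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    return min(rates.values()) / max(rates.values())


# Hypothetical log of algorithmic decisions for two patient groups.
decisions = ([("A", True)] * 60 + [("A", False)] * 40
             + [("B", True)] * 30 + [("B", False)] * 70)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
THRESHOLD = 0.8  # assumed alert level, echoing the US "four-fifths" heuristic
print(rates, f"ratio={ratio:.2f}", "FLAG" if ratio < THRESHOLD else "ok")
```

Here group B is approved at half the rate of group A (0.3 versus 0.6), so the ratio of 0.5 is flagged. A low ratio is a signal for investigation rather than proof of unfair treatment, since groups may differ in clinically relevant ways.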

Association of Computing Machinery (ACM)

In May 2017, the ACM US Public Policy Council and the ACM Europe Council Policy Committee issued a set of seven principles designed to address (a) potentially harmful biases generated by algorithms that are not transparent, and (b) biased input data used to train these algorithms [31]. These recommendations were not specific to health care, but they laid the foundation by explaining what algorithms are and how they make decisions, and by describing the technical challenges and opportunities in preventing and mitigating potentially harmful bias.

The seven principles for algorithmic transparency and accountability are: awareness of biases and potential harm; access and redress for affected individuals; accountability for decisions made using algorithms; explanation of both the procedures followed by the algorithm and the specific decisions made; data provenance; auditability of models, algorithms, data and decisions; and validation and testing of methods and results.
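Auditability and data provenance both depend on recording what an algorithm decided, with which model version and on which data. The sketch below shows one possible way to keep such records; the record fields, the model name and the data-source label are hypothetical, and a production system would also need tamper-resistant, access-controlled storage.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DecisionRecord:
    model_version: str  # which model/algorithm produced the decision
    data_source: str    # provenance of the input data
    input_sha256: str   # fingerprint of the exact input the model saw
    decision: str


def record_decision(audit_log, model_version, data_source, inputs, decision):
    """Append an auditable record of one automated decision to audit_log."""
    # Sorting keys makes the fingerprint independent of dictionary order.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    record = DecisionRecord(model_version, data_source,
                            hashlib.sha256(canonical).hexdigest(), decision)
    audit_log.append(asdict(record))
    return record


audit_log = []
rec = record_decision(audit_log, "triage-model-1.2", "ehr-export-2018-06",
                      {"age": 70, "lactate": 3.1}, "escalate")
print(json.dumps(audit_log, indent=2))
```

Hashing a canonical JSON form of the inputs lets an auditor later confirm which exact data a decision was based on, without the log having to store the raw inputs.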

Organization for Economic Co-operation and Development (OECD)

In September 2017, the OECD published a report on Algorithms and Collusion [32]. The paper addressed the challenges that algorithms present for both competition law enforcement and market regulation, and considered how traditional tools might be used to tackle forms of algorithmic collusion. Given the multi-dimensional nature of algorithms, the report suggests that policy approaches should be developed in cooperation with competition law enforcers, consumer protection authorities, data protection agencies, relevant sectoral regulators and computer science organizations with expertise in deep learning.

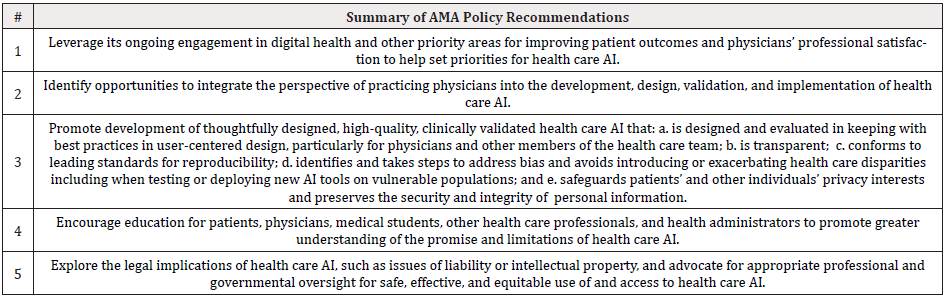

American Medical Association (AMA) Guidance for Health Care Stakeholders

In June 2018, the AMA’s House of Delegates, which comprises proportional representation from every major national medical specialty society and state medical association, adopted its first policy on augmented intelligence in health care [33]. The report accompanying the policy discussed current-generation AI systems that should augment the clinical decision-making of physicians, and advanced the concept that physicians and machines working in combination improve clinical decision-making and patient health outcomes. To underscore the central role that humans must continue to play in health care even when enhanced with AI systems, the report used the term augmented intelligence instead of artificial intelligence, reflecting terminology used by others deploying AI systems in health care (such as IBM, Microsoft and Siemens). The policy report stated that the overarching goal of AI in health care is to be human-centered, augment human intelligence and advance the quadruple aim: improve population health; improve health outcomes and patient satisfaction; increase value; and improve health care team satisfaction (Table 2).

International Telecommunication Union (ITU) and World Health Organization (WHO) Joint Focus Group on Artificial Intelligence for Health

The International Telecommunication Union (ITU) has established a new Focus Group on “Artificial Intelligence for Health” (FG-AI4H) in partnership with the World Health Organization (WHO) [34]. FG-AI4H will identify opportunities for international standardization of AI for health-relevant data, information, algorithms, and processes, which will foster the application of AI to health issues on a global scale. In particular, it will establish a standardized assessment framework with open benchmarks for the evaluation of AI-based methods for health, such as AI-based diagnosis, triage or treatment decisions.

Summary and Conclusion

AI has the potential to drive valuable transformation in health and in the health care ecosystem. However, several concerns continue to impede the assimilation of AI into the mainstream of health care and multiple other fields. These concerns include algorithmic transparency, liability, accountability, algorithmic bias, representative data, interpretability and explainability.

Numerous governments, consortiums and academic or scientific groups have assembled expert stakeholders from multiple disciplines to work toward recommending action steps to alleviate these concerns. A critical component of these recommendations is making long-term investments in AI research along with understanding and addressing the ethical, legal and societal implications of AI. Additional steps include creating awareness of biases and potential harm along with explaining the procedures used by an algorithm and how a decision was made. Establishing independent councils to provide impartial advice to the government, the private sector and the public on topics related to use of algorithms will also help alleviate concerns with taking AI mainstream.

For health care professionals, the recommendations by the American Medical Association (AMA) may hold the most appeal, as they advance the concept that physicians and a machine working in combination have the greatest potential to improve clinical decision-making and patient health outcomes.

Finally, this field is still evolving, and the industry as a whole may have to take a wait-and-see approach before settling on the right set of policies and regulations to bring AI into mainstream health care.

Conflict of Interest Statement

The authors have no conflicts to disclose.

References

- Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, et al. (2017) Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol 2: 230-243.

- Berwick DM, Nolan TW, Whittington J (2008) The Triple Aim: Care, Health, and Cost. Health Aff 27(3): 759-769.

- Bodenheimer T, Sinsky C (2014) From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med 12(6): 573-576.

- Marr B (2018) How Is AI Used in Healthcare - 5 Powerful Real-World Examples That Show the Latest Advances.

- Robeznieks A (2019) 3 ways medical AI can improve workflow for physicians. American Medical Association.

- Biesdorf S, Niedermann F (2014) Healthcare’s digital future. McKinsey.

- Caruana R, Lou Y, Gehrke J, Koch P, Sturm M, et al. (2015) Intelligible Models for HealthCare. Proc. 21st ACM SIGKDD Int. Conf. Knowl. Discov. Data Min. - KDD ’15, New York, New York, USA: ACM Press 1721-1730.

- Bias in artificial intelligence and the importance of independent data Brave New Coin.

- Finlayson SG, Chung HW, Kohane IS, Beam AL (2018) Adversarial Attacks Against Medical Deep Learning Systems. 363(6433): 1287-1289.

- Lambert F (2018) NTSB releases preliminary report on fatal Tesla crash on Autopilot - Electrek.

- Rajpurkar P, Irvin J, Zhu K, Yang B, Mehta H, et al. (2017) CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning.

- Rajkomar A, Oren E, Chen K, Dai AM, Hajaj N, et al. (2018) Scalable and accurate deep learning with electronic health records. Npj Digit Med 1: 18.

- Bini SA (2018) Artificial Intelligence, Machine Learning, Deep Learning, and Cognitive Computing: What Do These Terms Mean and How Will They Impact Health Care? J Arthroplasty. 33(8): 2358-2361.

- The Johns Hopkins Hospital Launches Capacity Command Center to Enhance Hospital Operations.

- The Dark Secret at the Heart of AI - MIT Technology Review.

- Google is using 46 billion data points to predict a hospital patient’s future -Quartz.

- (2017) Privacy | HHS.gov n.d. https://www.hhs.gov/hipaa/for-professionals/privacy/index.html

- Kogetsu A, Ogishima S, Kato K (2018) Authentication of Patients and Participants in Health Information Exchange and Consent for Medical Research: A Key Step for Privacy Protection, Respect for Autonomy, and Trustworthiness. Front Genet 9:167.

- Erickson S, (2018) Luna DNA Is About to Revolutionize Medicine -- and It’ll Pay You to Help -- The Motley.

- Thomas S, (2017) Artificial Intelligence, Medical Malpractice, and the End of Defensive Medicine | Bill of Health n.d.

- Medical Liability and Malpractice - State Laws and Legislation.

- Miller FH (1986) Medical malpractice litigation: do the British have a better remedy? Am J Law Med 11(4): 433-463.

- Briscoe G (2018) Medical Malpractice Liability: United Kingdom (England and Wales) | Law Library of Congress n.d.

- Abràmoff MD, Lavin PT, Birch M, Shah N, Folk JC (2018) Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. Npj Digit Med 1: 39.

- AskScience AMA Series: I’m Michael Abramoff, a physician/scientist, and Principal Investigator of the study that led the FDA to approve the first ever autonomous diagnostic AI, which makes a clinical decision without a human expert. AMA. : askscience n.d.

- (2018) EUR-Lex - 32016R0679 - EN - EUR-Lex n.d. https://eur-lex.europa.eu/legal-content/en/TXT/?uri=CELEX%3A32016R0679

- Kyodo (2017) Japan pushes for basic AI rules at G-7 tech meeting | The Japan Times n.d.

- Grosz BJ, Altman R, Horvitz E, Mackworth A, Mitchell T, et al. Standing Committee of the One Hundred Year Study of Artificial Intelligence.

- (2016) The National Artificial Intelligence Research and Development Strategic Plan n.d.

- The use of algorithms in decision making RSS evidence to the House of Commons Science and Technology Select Committee inquiry.

- ACM US Public Policy Council (2017) Statement on Algorithmic Transparency and Accountability.

- ALGORITHMS AND COLLUSION Competition policy in the digital age.

- Chicago (2018) AMA Passes First Policy Recommendations on Augmented Intelligence | American Medical Association.

- Focus Group on "Artificial Intelligence for Health".

- Goodman BW. A Step Towards Accountable Algorithms? Algorithmic Discrimination and the European Union General Data Protection.

- (2016) G7 ICT Ministers’ Meeting in Takamatsu, Kagawa Joint Press Conference.

- NSTC Documents & Reports | The White House.

- (2018) The Networking and Information Technology Research and Development (NITRD) Program.
