Review Article

Creative Commons, CC-BY

Applications of ML and DL Algorithms in The Prediction, Diagnosis, and Prognosis of Alzheimer’s Disease

*Corresponding author: Fiza Saeed, University of Texas, 701 S Nedderman Dr, Arlington, Texas 76019, USA

Received: June 05, 2024; Published: June 10, 2024

DOI: 10.34297/AJBSR.2024.22.003014

Abstract

Early and accurate diagnosis of Alzheimer’s disease (AD) is vital for timely prevention and treatment. This review aims to summarize the most recent studies that use machine learning and deep learning algorithms to assess Alzheimer’s progression and identify the early stages of AD, and to discuss the direction of this research. It covers several applications of contemporary AI algorithms for diagnosing AD, such as Support Vector Machines, Logistic Regression, Random Forest, Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), and Transfer Learning. Their benefits, drawbacks, and effectiveness are also examined. The main findings and medical imaging preprocessing methods used in previous studies are outlined in the discussion. Lastly, we discuss the constraints and prospects for the future, and stress that more data and more sophisticated neuroimaging technologies are needed.

Keywords: Alzheimer’s disease, Artificial Intelligence, Neuroimaging, Neural Networks, MRI and CT

Introduction

Neurodegenerative diseases, characterized by the persistent degeneration of neurons [1], are commonly regarded as a collection of disorders that gradually and severely impair the functional abilities of the human nervous system [2]. Alzheimer’s disease (AD) stands out as a prevalent neurodegenerative disorder and a primary global public health concern [3]. AD is a persistent and progressive form of cognitive impairment that affects cognitive abilities and daily functioning [3]; memory and other significant mental functions deteriorate over time [4]. An individual with AD may initially experience mild confusion and memory loss [3]. AD is a leading cause of dementia today. It harms the brain by killing brain cells, which impairs thinking, memory, and behavior [5]. The risk of AD increases with advancing age [5]. Although there is currently no known cure or preventive measure for AD, several treatments can help slow the disease’s progression [2,4].

The incidence of AD increases in later life [5], with those over 65 being particularly at risk [6]. Globally, about 44 million people currently live with AD dementia [7]. By 2050, there will likely be more than 100 million cases of AD unless advances in diagnosis and prevention produce a solution [8]. AD causes brain degeneration that worsens over time [5]. Patients with AD should therefore be divided into subgroups according to the stage of the disease [8]. This division is essential because patients at different stages require different treatments, and the same medication cannot be used for all of them [9]. Classifying AD into distinct stages benefits treatment through better symptom management and enhanced patient satisfaction [9].

Owing to tremendous advances in neuroimaging technology, distinctive changes in the brains of AD patients can now be observed, including changes in the prodromal and pre-symptomatic states [10]. This information helps medical professionals make a more precise diagnosis [9]. In clinical settings, positron emission tomography (PET), magnetic resonance imaging (MRI), and computed tomography (CT) are among the neuroimaging techniques used to diagnose AD [11]. Given the swift advancement and extensive use of artificial intelligence (AI) in the medical domain, neuroimaging-based computer-aided diagnosis (CAD) of AD could serve as a supplementary technique to support physicians [12].

Machine learning algorithms require predefined preprocessing steps or a suitable architectural design before they can be applied [13]. Machine learning classification studies typically involve four primary steps: feature extraction, feature selection, dimensionality reduction, and selection of a feature-based classification algorithm [14]. These steps can be time-consuming because they require several optimization stages and specialized knowledge [15]. The reproducibility of these methods has improved [16]. CNNs, a component of deep learning (DL), have proven to be a successful technique for extracting features from neuroimages and for producing state-of-the-art (SOTA) results in a variety of image understanding and recognition tasks [17]. Additionally, several DL-based CAD techniques have been developed [11,12,17].

We thoroughly reviewed studies that applied machine learning and deep learning techniques to neuroimaging and neural data in order to predict the course of AD and detect the disease early. DL and ML studies on AD published between 2014 and January 2024 were identified using PubMed and Google Scholar. The articles were analyzed and grouped according to neural data type and algorithm, and the results were compiled.

We also discuss the difficulties and consequences of using these techniques in AD research.

Neuroimaging Techniques

The most common neuroimaging techniques which have been utilized in the articles reviewed in this survey are:

A. Computed Tomography: CT is a structural imaging method that creates cross-sectional or three-dimensional (3D) images by integrating X-ray projections from various angles [18]. Its benefits include low cost and quick examination times [19]. However, because CT resolves the medial temporal lobe poorly, MCI might be mistaken for a sign of normal ageing [20].

B. Positron Emission Tomography: PET is a functional imaging method that gathers metabolic data by detecting the distribution of positron-emitting tracers, which offers helpful information for diagnosing AD [21]. However, CT and PET exams expose patients to radiation, whereas MRI has the distinct benefit of not doing so [22].

C. Magnetic Resonance Imaging: MRI is a medical imaging technique that creates images of organs using electromagnetic signals emitted by the human body [23]. It can also create high-resolution 3D images of brain tissue and is sensitive to brain shrinkage (atrophy). As a result, using MRI in clinical practice to understand and diagnose AD is promising [24].

Methodology

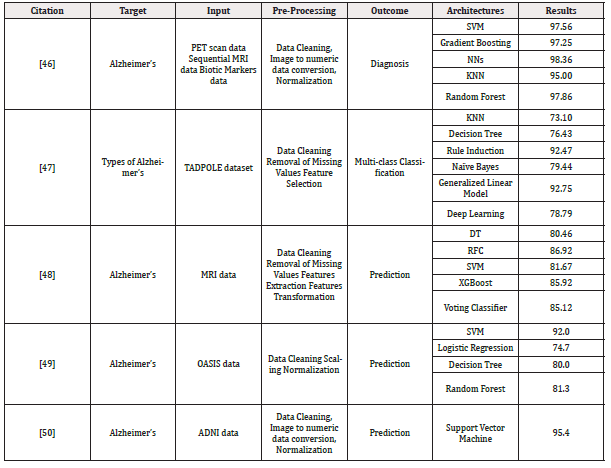

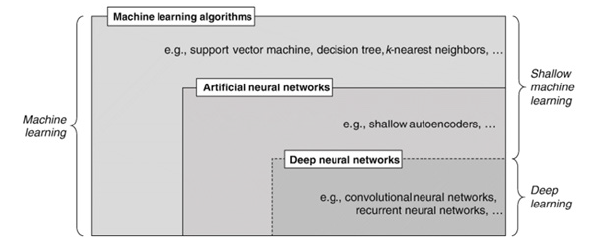

We conducted a comprehensive review of earlier research that employed DL and ML techniques to predict, diagnose, and classify AD using multimodal neuroimaging and neural data. Figure 1 outlines the search strategy as a PRISMA flow diagram. We searched Google Scholar and PubMed using the following keywords: Alzheimer’s disease, deep learning, deep neural network, machine learning, SVM, KNN, CNN, Neuroimaging, MRI, and multimodal. The search yielded 269 records, of which 109 overlapping records were removed. Ultimately, 16 articles were included in this review (Figure 1).

Research Findings

The research findings from the reviewed articles are as follows:

Machine Learning Models

Machine learning (ML) comprises computer programs or tools that use statistical models and algorithms to infer and recognize patterns [25]. ML algorithms improve automatically with experience [26]: they search for methods, train models, and apply the learned strategy to determine the output automatically [26]. ML systems are also capable of adapting to a changing environment.

Decision trees (DTs) segment the data repeatedly based on feature cutoff values, forming a tree structure [27]. Each split divides the instances into subsets; the final subsets are called leaf nodes, and the intermediate subsets are called internal nodes [28]. Because it is less prone to overfitting, a random forest model generally outperforms a single decision tree [29]. A random forest is made up of many DTs, each marginally different [29]. Each tree is built separately on a resampled version of the data (bagging), and the ensemble predicts by majority vote [30]. Consequently, each tree’s capacity for prediction is preserved while the level of overfitting is reduced [30].
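The bagging and majority-voting idea described above can be illustrated with a minimal sketch. It is not taken from any of the reviewed studies: it uses one-split trees ("stumps") instead of full decision trees, a toy one-feature data set, and hypothetical function names (`fit_stump`, `fit_forest`, `predict_forest`).

```python
import random
from collections import Counter

def fit_stump(rows):
    """Pick the (feature, cutoff) split that misclassifies the fewest rows.

    rows: list of (features, label) pairs with label in {0, 1}.
    Returns (feature_index, cutoff, label_below, label_above).
    """
    best = None
    n_features = len(rows[0][0])
    for f in range(n_features):
        for cutoff in sorted({x[f] for x, _ in rows}):
            for below, above in ((0, 1), (1, 0)):
                errors = sum(
                    (below if x[f] <= cutoff else above) != y for x, y in rows
                )
                if best is None or errors < best[0]:
                    best = (errors, f, cutoff, below, above)
    return best[1:]

def predict_stump(stump, x):
    f, cutoff, below, above = stump
    return below if x[f] <= cutoff else above

def fit_forest(rows, n_trees=25, seed=0):
    """Bagging: fit each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]
        forest.append(fit_stump(sample))
    return forest

def predict_forest(forest, x):
    """Majority vote over the individual stump predictions."""
    votes = Counter(predict_stump(stump, x) for stump in forest)
    return votes.most_common(1)[0][0]

# Toy data (an assumption for illustration): one feature, two classes.
toy = [((0.1,), 0), ((0.2,), 0), ((0.3,), 0), ((0.4,), 0),
       ((0.6,), 1), ((0.7,), 1), ((0.8,), 1), ((0.9,), 1)]
forest = fit_forest(toy)
low_prediction = predict_forest(forest, (0.05,))
high_prediction = predict_forest(forest, (0.95,))
```

Because every stump sees a slightly different bootstrap sample, the cutoffs differ from tree to tree, and the vote averages out the quirks of any single tree.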

A support vector machine (SVM) uses appropriate hyperplanes within a multi-dimensional space to determine the class of data points [31]. A hyperplane separates the cases of two categories, which occupy adjacent vector clusters, so that one category lies on one side and the other category on the other side [32]. The vectors closest to the hyperplane are the support vectors [32,33]. SVM uses both training and test data: the training data is divided into attributes and target values, and the model built from it is used to predict the target values for the test data [32].
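As a hedged illustration of the geometry described above, the sketch below classifies points by the side of a hyperplane they fall on and picks out the points closest to it. The hyperplane (`w`, `b`) is fixed by hand purely for illustration; a real SVM would find it by optimization, which is not shown here. All names and values are assumptions.

```python
import math

def decision_value(w, b, x):
    """Signed score w.x + b; its sign tells which side of the hyperplane x is on."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, b, x):
    return 1 if decision_value(w, b, x) >= 0 else -1

def distance_to_hyperplane(w, b, x):
    norm = math.sqrt(sum(wi * wi for wi in w))
    return abs(decision_value(w, b, x)) / norm

# Hypothetical hyperplane x1 + x2 = 3 and four toy points.
w, b = (1.0, 1.0), -3.0
points = [(1.0, 1.0), (0.0, 1.0), (2.0, 2.0), (3.0, 2.0)]

labels = [classify(w, b, p) for p in points]

# The points nearest to the hyperplane play the role of support vectors.
closest = min(distance_to_hyperplane(w, b, p) for p in points)
support_vectors = [
    p for p in points
    if math.isclose(distance_to_hyperplane(w, b, p), closest)
]
```

Here `(1.0, 1.0)` and `(2.0, 2.0)` lie nearest to the boundary on opposite sides, so they are the points that would constrain the margin.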

XGBoost applies gradient-boosted decision trees with a focus on speed and efficiency [34]. Gradient boosting models are usually time-consuming to train and scale poorly because the models are trained sequentially; performance and speed are XGBoost’s main priorities [34]. Separately, one of the easiest ways to combine the predictions of several learning algorithms is voting [35]. A voting classifier is not an actual classifier but a wrapper around multiple classifiers, which are trained and evaluated in parallel to take advantage of their individual strengths [36]. After training on the data sets with various ensembles and algorithms, the outcome can be forecast.
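The sequential nature of gradient boosting mentioned above can be sketched in a few lines. This is plain gradient boosting for squared error with one-split regression trees, not XGBoost itself, which adds regularization and many engineering optimizations. The data, function names, and settings are assumptions for illustration.

```python
def fit_regression_stump(xs, residuals):
    """Best single-cutoff split on a 1-D feature, minimizing squared error."""
    best = None
    for cutoff in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= cutoff]
        right = [r for x, r in zip(xs, residuals) if x > cutoff]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, cutoff, left_mean, right_mean)
    return best[1:]

def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Each round fits a stump to the residuals left by all previous rounds."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared loss, the residuals are the negative gradient.
        residuals = [y - p for y, p in zip(ys, preds)]
        cutoff, left_mean, right_mean = fit_regression_stump(xs, residuals)
        stumps.append((cutoff, left_mean, right_mean))
        preds = [
            p + learning_rate * (left_mean if x <= cutoff else right_mean)
            for x, p in zip(xs, preds)
        ]
    return base, stumps, preds

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

# Toy regression data (an assumption for illustration).
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]

base, stumps, preds = boost(xs, ys)
initial_mse = mse(ys, [base] * len(ys))
final_mse = mse(ys, preds)
```

Round k cannot start until round k-1 has produced its predictions, which is why training is hard to parallelize across rounds.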

Deep Learning

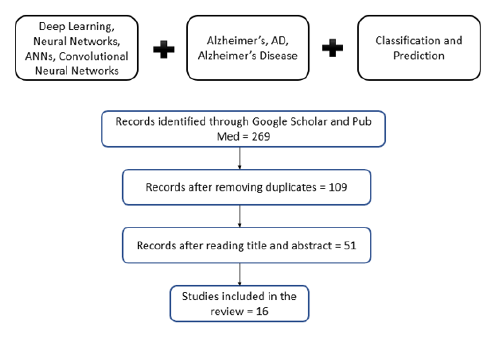

As a subset of ML, as shown in Figure 2, deep learning acquires features via a hierarchical learning procedure [37]. Deep learning techniques for prediction or classification have been used in several domains, such as natural language processing (NLP) and computer vision, where they show significant performance advances. Since there have been many reviews of deep learning techniques recently, we concentrate here on the fundamental ideas of Convolutional Neural Networks (CNNs), which are a foundation of deep learning [38]. We also discuss deep learning architectures for prognostic prediction and AD classification. Artificial neural networks (ANNs) are networks of interconnected artificial neurons that grew out of the Perceptron. The single-layer Perceptron’s demonstrated limitation, that it can learn only linearly separable patterns, prompted the work on practical error functions and gradient computation techniques outlined in the foundational publications. In addition, the gradient descent back-propagation technique was created and used to reduce the error function (Figure 2).

Deep Neural Networks: DNNs are computational models made of numerous simple, parallel processing units (like neurons) layered into an interconnected structure [39]. When such a network is trained by back-propagation with sigmoid activations, the gradient can reach 0 before the weights reach their optimal values: the derivative of the sigmoid is at most 0.25, and the product of many such derivatives across layers approaches 0. This is called the “vanishing gradient problem,” a significant barrier for deep neural networks, and it has been the subject of extensive research [40]. One result of this effort is the replacement of the sigmoid activation function with alternatives such as the hyperbolic tangent function, ReLU, and Softplus.
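The 0.25 bound on the sigmoid derivative, and its effect when multiplied across layers, can be checked numerically. The sketch below is a simplification: it ignores the weights and looks only at the activation-derivative factor that each layer contributes during back-propagation. Function names are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)

# The derivative peaks at z = 0, where sigmoid(0) = 0.5 and 0.5 * 0.5 = 0.25.
max_derivative = sigmoid_derivative(0.0)

def gradient_upper_bound(n_layers):
    """Largest possible product of sigmoid derivatives across n_layers layers."""
    return max_derivative ** n_layers

bound_10_layers = gradient_upper_bound(10)
sampled = [sigmoid_derivative(z / 10.0) for z in range(-100, 101)]
```

Even in the best case, ten stacked sigmoid layers scale the gradient by 0.25 to the power of 10, which is below one millionth, so the earliest layers barely update.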

The hyperbolic tangent function widens the range of derivative values compared with the sigmoid. The most popular activation function, ReLU, passes a value through if it is greater than 0 and replaces any value less than 0 with 0 [41]. Because its derivative is 1 when the value is greater than 0, the weights can be adjusted through the stacked hidden layers all the way back to the first layer without the gradient vanishing. This straightforward technique speeds up deep learning training and enables the construction of multiple layers [39]. Softplus is a smooth alternative to ReLU that descends gradually toward zero where ReLU drops to zero abruptly [41].
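For reference, the activation functions discussed above can be written out directly. This is a minimal sketch using the standard textbook definitions; the function names are illustrative.

```python
import math

def relu(z):
    """Pass positive values through unchanged; replace negatives with 0."""
    return max(0.0, z)

def relu_derivative(z):
    """1 for positive inputs, 0 otherwise (the value at exactly 0 is a convention)."""
    return 1.0 if z > 0 else 0.0

def softplus(z):
    """Smooth approximation of ReLU: log(1 + e^z)."""
    return math.log1p(math.exp(z))

def tanh_derivative(z):
    """Derivative of tanh; it peaks at 1, compared with 0.25 for the sigmoid."""
    return 1.0 - math.tanh(z) ** 2

relu_values = [relu(z) for z in (-2.0, 0.0, 3.0)]
softplus_at_zero = softplus(0.0)            # log(2): positive where ReLU is 0
gap_at_ten = softplus(10.0) - relu(10.0)    # the two nearly coincide for large z
```

The derivative of ReLU stays at 1 for every positive input, so multiplying it across layers does not shrink the gradient the way repeated sigmoid derivatives do.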

Although gradient descent computes the weights accurately, it typically takes a long time because every update requires differentiating over every data point. Therefore, to address speed and accuracy concerns, more sophisticated gradient descent techniques have been developed alongside new activation functions [41]. Stochastic Gradient Descent (SGD), for instance, uses a subset randomly selected from the complete data for quicker and more frequent updates. Adaptive Moment Estimation (Adam) is one of the most widely used gradient descent techniques today [40].
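The mini-batch idea behind SGD can be sketched on a one-parameter model. The example below fits `y = w * x` by squared error, updating `w` from a randomly chosen subset of the data at each step rather than from the full data set. The data, learning rate, and batch size are assumptions for illustration, and Adam’s adaptive step sizes are not shown.

```python
import random

def sgd_fit(xs, ys, learning_rate=0.05, batch_size=2, n_epochs=200, seed=0):
    """Fit y = w * x with mini-batch stochastic gradient descent."""
    rng = random.Random(seed)
    w = 0.0
    indices = list(range(len(xs)))
    for _ in range(n_epochs):
        rng.shuffle(indices)  # a new random partition into batches each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this batch only.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= learning_rate * grad
    return w

# Noise-free toy data generated from y = 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 2.0, 4.0, 6.0]
w = sgd_fit(xs, ys)
```

Each update touches only two of the four points, so the model receives twice as many updates per pass over the data as full-batch gradient descent would give.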

Models of Deep Learning: A variety of machine learning models are being developed to make the most of the data at hand and to complete tasks such as automatic prediction, classification, clustering, segmentation, and anomaly detection. Training models for tasks like classification to a consistent level of accuracy requires labelled data. This review systematically evaluates active fields of study and DL model applications in healthcare systems and medical diagnosis. The standard DL models, their architectures, and the associated benefits and drawbacks are discussed to shed light on their prospects.

The prognosis and diagnosis of life-threatening illnesses (such as brain tumours, lung cancer, and breast cancer), which are laborious and prone to error when done manually, have benefited significantly from the use of deep learning networks in medical image processing. These deep learning techniques are used to process medical images and to solve tasks such as segmentation, prediction, and classification, in some cases more accurately than human readers. Still, certain shortcomings of the existing DL models motivate researchers to look for ways to improve them.

Overfitting has also significantly shaped the history of deep learning, prompting attempts to address it at the architectural level. The Deep Boltzmann Machine (DBM), a deeper structure, was created by stacking restricted Boltzmann machines (RBMs). The Deep Belief Network (DBN) is a supervised learning technique that links the unsupervised features taken from each stacked layer [42]. DBN outperformed other models, which is one of the factors behind the rise in popularity of deep learning. DBN employs regression-based weight initialization to address the overfitting issue, whereas CNN efficiently reduces the number of model parameters by integrating convolution and pooling layers, resulting in decreased complexity [43]. The most common structures are CNN, DBM, Auto-Encoders (AE), sparse AE, and stacked AE [44]. An AE is an unsupervised learning technique that uses SGD and back-propagation to make its output approximate its input. An AE performs dimensionality reduction, but training is challenging because of the vanishing gradient problem. Sparse AE resolves this problem by permitting only a limited number of active hidden units. Stacked AE stacks sparse AEs, in the same way that DBN stacks RBMs [45].

Deep learning techniques used for diagnosing Alzheimer’s disease include DNN, DBM, and stacked AE. Each method has been developed to distinguish AD patients from cognitively normal controls (CN) or from patients with mild cognitive impairment (MCI), the prodromal stage of the disease. Each method has also been used to predict the conversion of MCI to AD from neuroimaging data. This paper compares pure deep learning applications with a “hybrid method” that combines deep learning with conventional machine learning techniques, such as using an SVM as the classifier.

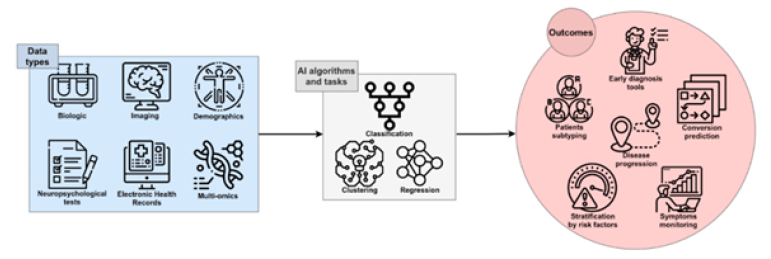

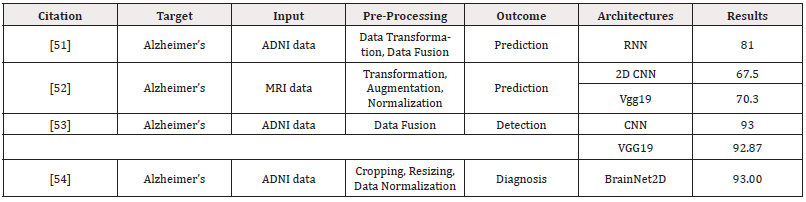

Deep Learning for Alzheimer’s Diagnosis and Prognosis: Two typical preprocessing procedures required to choose the best features from multimodal neuroimaging data for diagnostic classification are neuroimaging registration and feature extraction. These steps have a significant impact on classification performance. Deep learning techniques, however, have been used to diagnose AD by classifying the original neuroimaging data without a feature selection step. Tables 1 and 2 summarize the articles that used machine learning and deep learning, respectively, for the diagnosis and prognosis of Alzheimer’s disease (Figure 3).

Discussion

Initiating an efficacious treatment regimen for AD requires a precise and efficient diagnosis. Timely detection of AD, in particular, is crucial for developing effective therapies and, eventually, for providing quality patient care. In this work, we reviewed ML and DL techniques for diagnosing and classifying AD based on neuroimaging data. We examined sixteen articles published between 2014 and 2024 and categorized them by machine learning algorithm, deep learning algorithm, and type of neuroimaging. Of the sixteen publications, four exclusively employed deep learning techniques, and the others employed a hybrid approach that combined deep learning with more conventional machine learning techniques to create a classifier.

Hybrid approaches have yielded an accuracy of up to 98.8% for AD classification and 83.7% for prediction of conversion from MCI to AD, even with the small amount of neuroimaging data available. Deep learning approaches have achieved an accuracy of up to 96.0% for AD classification and 84.2% for MCI conversion prediction. The amyloid PET scan, which contains pathophysiological information about AD, produced the 96% accuracy, while the stacked AE (SAE) procedure produced the highest accuracy of 98.8%. High accuracy obtained from small amounts of data is a concern, particularly if the approach is prone to overfitting. When a 3D CNN was applied to MRI without the feature extraction step, the highest accuracy for AD classification was 87% (Cheng, et al., 2017). Combining two or more types of neuroimaging data has been shown to yield higher accuracies than using a single type.

Table 1: The summarized studies using machine learning and deep learning for AD’s prediction and detection.

Well-defined features influence performance outcomes in traditional machine learning. However, choosing the best features becomes more challenging the more complex the data. Deep learning automatically extracts the best features from the data (that is, without human assistance, the classifier trained by deep learning finds features that influence the diagnosis classification). Deep learning has become increasingly popular for medical image analysis due to its superior performance and ease of use. Since 2015, there have been many studies on AD using CNN, demonstrating superior performance in image recognition compared to other deep learning algorithms.

Current deep learning trends aim to analyze data more quickly and accurately than human analysts. In the well-known Google study on the diagnostic classification of diabetic retinopathy, classification performance surpassed that of a trained expert. For clinical use, deep learning-based diagnostic classification must perform consistently across various scenarios, and the resulting classifier must be interpretable. As discussed below, several issues must be resolved before deep learning-based diagnostic classification and prognostic prediction are ready for clinical use.

Deep learning performance depends on hyper-parameters that practitioners can adjust, such as learning rates, batch sizes, weight decay, momentum, and dropout probabilities, and it is also sensitive to the random numbers generated at the beginning of training. Using the same random seeds at all levels is crucial for obtaining the same experimental result, as is maintaining the same code base. For the most part, however, the studies we reviewed did not report their hyper-parameters and random seeds. Replicating a study and getting the same results can therefore be challenging because of the uncertainty in the configuration and the randomness of the training procedure.
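The role of the random seed can be shown with a small sketch. It uses only Python’s standard `random` module and a hypothetical `init_weights` helper; deep learning frameworks keep their own random number generators, which would need to be seeded as well. The configuration values are placeholders, not settings from any reviewed study.

```python
import json
import random

def init_weights(n_weights, seed):
    """Draw initial weights from a seeded generator so that runs are repeatable."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 0.1) for _ in range(n_weights)]

# Hypothetical configuration: recording it next to the results lets
# others rerun the experiment with identical settings.
config = {
    "seed": 42,
    "learning_rate": 1e-3,
    "batch_size": 32,
    "weight_decay": 1e-4,
    "momentum": 0.9,
    "dropout": 0.5,
}

run_a = init_weights(5, seed=config["seed"])
run_b = init_weights(5, seed=config["seed"])
run_c = init_weights(5, seed=7)

# Serialized form that could be stored alongside the reported results.
config_record = json.dumps(config, sort_keys=True)
```

`run_a` and `run_b` are identical because they share a seed, while `run_c` starts from different weights and could therefore end at a different accuracy.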

When available neuroimaging data is limited, careful consideration at the architectural level is required to prevent overfitting and reproducibility problems. An improperly constructed data set can lead to data leakage, in which a model uses extraneous information for classification. In the diagnostic classification of a progressive and irreversible disease such as AD, every later MRI scan of a patient who has been diagnosed with AD should also be labelled as AD. Once the training and testing sets share scans of the same patient’s brain, the morphological characteristics of that brain, rather than the dementia biomarkers, strongly influence the classification decision. Articles that did not explicitly explain how data leakage was prevented in their data set configuration were not included in the review.
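One common safeguard against the leakage described above is to split the data by patient rather than by scan, so that no patient contributes scans to both the training and the testing set. The sketch below illustrates the idea with a hypothetical `split_by_patient` helper and made-up records; it is not the procedure of any particular reviewed study.

```python
import random

def split_by_patient(scans, test_fraction=0.25, seed=0):
    """Split scans so that all scans of a given patient land on the same side.

    scans: list of (patient_id, scan_id, label) tuples.
    """
    patient_ids = sorted({patient_id for patient_id, _, _ in scans})
    rng = random.Random(seed)
    rng.shuffle(patient_ids)
    n_test = max(1, round(len(patient_ids) * test_fraction))
    test_patients = set(patient_ids[:n_test])
    train = [s for s in scans if s[0] not in test_patients]
    test = [s for s in scans if s[0] in test_patients]
    return train, test

# Made-up records: four patients, two MRI scans each.
scans = [
    ("p1", "p1_visit1", "AD"), ("p1", "p1_visit2", "AD"),
    ("p2", "p2_visit1", "CN"), ("p2", "p2_visit2", "CN"),
    ("p3", "p3_visit1", "AD"), ("p3", "p3_visit2", "AD"),
    ("p4", "p4_visit1", "CN"), ("p4", "p4_visit2", "CN"),
]

train, test = split_by_patient(scans)
train_patients = {s[0] for s in train}
test_patients = {s[0] for s in test}
```

Shuffling individual scans instead would almost certainly place two visits of the same patient on opposite sides of the split, which is exactly the situation the paragraph above warns against.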

Key findings from deep learning ultimately need to be replicated in future research on completely independent data sets. While this is widely recognized in other fields, such as genetics, deep learning studies using neuroimaging data have been slower to adopt the practice. Ideally, the growing open ecosystem of medical research data will offer a foundation for addressing this issue, particularly in the area of AD and related disorders.

Deep learning techniques depend heavily on large datasets to achieve the expected accuracy. Hybrid methods are particularly helpful when neuroimaging data is limited. They rest on two principles: deep learning for feature extraction and conventional machine learning for constructing the diagnostic classifier, and the reported results are strong. Besides mitigating data limitations, the authors of these studies also offer recommendations for future researchers. An AE can likewise be used to decode the original image values so that the output resembles the original image, and this output is then fed in as input, which makes effective use of a limited neuroimaging dataset.

Conclusion

This literature review shows that machine learning and deep learning techniques, particularly in combination, are highly promising for Alzheimer’s disease diagnosis and prediction. Their use in diagnostic analysis is growing rapidly because of their ability to identify the distinctive patterns of disease progression from neuroimaging data. However, despite these advances, problems of overfitting, reproducibility, and interpretability remain, which calls for careful attention to architectural design and for wider use of diverse datasets. Future research should focus on developing models that generalize well across different datasets and on clarifying the features the models extract, which can aid healthcare professionals.

Acknowledgement

None.

Conflict of interest

None.

References

- R Armstrong, P Richard, A Armstrong (2020) What causes neurodegenerative disease. Folia Neuropathol 58(2): 93-112.

- S Lasaponara, F Marson, F Doricchi, M Cavallo (2021) A Scoping Review of Cognitive Training in Neurodegenerative Diseases via Computerized and Virtual Reality Tools: What We Know So Far. Brain Sciences 11(5): 528.

- RI Mehta, JA Schneider (2021) What is ‘Alzheimer’s disease’? The neuropathological heterogeneity of clinically defined Alzheimer’s dementia. Curr Opin Neurol 34(2): 237-245.

- H Targa Dias Anastacio, N Matosin, L Ooi (2022) Neuronal hyperexcitability in Alzheimer’s disease: what are the drivers behind this aberrant phenotype. Translational Psychiatry 12(1): 257.

- T Ayodele, E Rogaeva, JT Kurup, G Beecham, C Reitz, et al. (2021) Early-Onset Alzheimer’s Disease: What Is Missing in Research. Curr Neurol Neurosci Rep 21(2): 4.

- Ying Xu, Louise Lavrencic, Kylie Radford, Andrew Booth, Sohei Yoshimura, et al. (2021) Systematic review of coexistent epileptic seizures and Alzheimer’s disease: Incidence and prevalence. J Am Geriatr 69(7): 2011-2020.

- AA Tahami Monfared, MJ Byrnes, LA White, Q Zhang (2022) Alzheimer’s Disease: Epidemiology and Clinical Progression. Neurol Ther 11(2): 553-569.

- Min Seok Baek, Han Kyeol Kim, Kyungdo Han, Hyuk Sung Kwon, Han Kyu Na, et al. (2022) Annual Trends in the Incidence and Prevalence of Alzheimer’s Disease in South Korea: A Nationwide Cohort Study. Front Neurol 13: 883549.

- SF Javaid, C Giebel, MA Khan, MJ Hashim (2021) Epidemiology of Alzheimer’s disease and other dementias: rising global burden and forecasted trends. F1000 Research 10: 425.

- RG de Souza, G dos Santos Lucas e Silva, WP dos Santos, ME de Lima (2021) Computer-aided diagnosis of Alzheimer’s disease by MRI analysis and evolutionary computing. Research on Biomedical Engineering 37(3): 455-483.

- V Sathiyamoorthi, AK Ilavarasi, K Murugeswari, S Thouheed Ahmed, B Aruna Devi, et al. (2021) A deep convolutional neural network-based computer aided diagnosis system for the prediction of Alzheimer’s disease in MRI images. Measurement 171: 108838.

- HM Zeng, HB Han, QF Zhang, H Bai (2021) Application of modern neuroimaging technology in the diagnosis and study of Alzheimer’s disease. Neural Regen Res 16(1): 73-79.

- Nicolai Franzmeier, Nikolaos Koutsouleris, Tammie Benzinger, Alison Goate, Celeste M Karch et al. (2020) Predicting sporadic Alzheimer’s disease progression via inherited Alzheimer’s disease-informed machine-learning. Alzheimer’s & Dementia 16(3): 501-511.

- Zhen Pang, Xiang Wang, Xulong Wang, Jun Qi, Zhong Zhao, et al. (2021) A Multi-modal Data Platform for Diagnosis and Prediction of Alzheimer’s Disease Using Machine Learning Methods. Mobile Networks and Applications 26(6): 2341-2352.

- C Kavitha, V Mani, SR Srividhya, OI Khalaf, CA Tavera Romero, et al. (2022) Early-Stage Alzheimer’s Disease Prediction Using Machine Learning Models. Front Public Health 10: 853294.

- S Grueso, R Viejo-Sobera (2021) Machine learning methods for predicting progression from mild cognitive impairment to Alzheimer’s disease dementia: a systematic review. Alzheimer’s Research & Therapy 13(1): 162.

- Tieme W P Janssen, Jennie K Grammer, Martin G Bleichner, Chiara Bulgarelli, Ido Davidesco, et al. (2021) Opportunities and Limitations of Mobile Neuroimaging Technologies in Educational Neuroscience. Mind Brain and Education 15(4): 354-370.

- Michaela Kavkova, Tomas Zikmund, Annu Kala, Jakub Salplachta, Josef Kaiser, et al. (2021) Contrast enhanced X-ray computed tomography imaging of amyloid plaques in Alzheimer disease rat model on lab based micro-CT system. Scientific Reports 11(1): 5999.

- Francisco Morales-Zavala, Pedro Jara-Guajardo, David Chamorro, Ana L Riveros, America Chandia-Cristi et al. (2021) In vivo micro computed tomography detection and decrease in amyloid load by using multifunctionalized gold nanorods: a neurotheranostic platform for Alzheimer’s disease. Biomater Sci 9(11): 4178-4190.

- Yanyan Kong, Shibo Zhang, Lin Huang, Chencheng Zhang, Fang Xie, et al. (2021) Positron Emission Computed Tomography Imaging of Synaptic Vesicle Glycoprotein 2A in Alzheimer’s Disease. Front Aging Neurosci 13: 731114.

- F Gao (2021) Integrated Positron Emission Tomography/Magnetic Resonance Imaging in clinical diagnosis of Alzheimer’s disease. Eur J Radiol 145: 110017.

- Antoine Leuzy, Ruben Smith, Nicholas C Cullen, Olof Strandberg, Jacob W Vogel, et al. (2022) Biomarker-Based Prediction of Longitudinal Tau Positron Emission Tomography in Alzheimer Disease. JAMA Neurol 79(2): 149-158.

- D AlSaeed, SF Omar (2022) Brain MRI Analysis for Alzheimer’s Disease Diagnosis Using CNN-Based Feature Extraction and Machine Learning. Sensors 22(8): 2911.

- N Yamanakkanavar, JY Choi, B Lee (2020) MRI Segmentation and Classification of Human Brain Using Deep Learning for Diagnosis of Alzheimer’s Disease: A Survey. Sensors 20(11): 3243.

- D Dhall, R Kaur, M Juneja (2020) Machine Learning: A Review of the Algorithms and Its Applications. Lecture Notes in Electrical Engineering 597: 47-63.

- B Mahesh (2018) Machine Learning Algorithms-A Review. International Journal of Science and Research.

- Jie Kuang, Pin Zhang, TianPan Cai, ZiXuan Zou, Li Li, et al. (2021) Prediction of transition from mild cognitive impairment to Alzheimer’s disease based on a logistic regression-artificial neural network-decision tree model. Geriatr Gerontol Int 21(1): 43-47.

- C Kavitha, V Mani, SR Srividhya, OI Khalaf, CA Tavera Romero, et al. (2022) Early-Stage Alzheimer’s Disease Prediction Using Machine Learning Models. Front Public Health 10: 853294.

- RA Saputra, C Agustina, D Puspitasari, R Ramanda, Warjiyono, et al. (2020) Detecting Alzheimer’s Disease by The Decision Tree Methods Based on Particle Swarm Optimization. J Phys Conf Ser 1641(1): 012025.

- V Karami, G Nittari, E Traini, F Amenta (2021) An Optimized Decision Tree with Genetic Algorithm Rule-Based Approach to Reveal the Brain’s Changes During Alzheimer’s Disease Dementia. Journal of Alzheimer’s Disease 84(4): 1577-1584.

- J Cervantes, F Garcia-Lamont, L Rodríguez-Mazahua, A Lopez (2020) A comprehensive survey on support vector machine classification: Applications, challenges and trends. Neurocomputing 408: 189-215.

- G Battineni, N Chintalapudi, F Amenta (2019) Machine learning in medicine: Performance calculation of dementia prediction by support vector machines (SVM). Inform Med Unlocked 16: 100200.

- A Retico, P Bosco, P Cerello, E Fiorina, A Chincarini, et al. (2015) Predictive Models Based on Support Vector Machines: Whole-Brain versus Regional Analysis of Structural MRI in Alzheimer’s Disease. Journal of Neuroimaging 25(4): 552-563.

- I Mahmood Ibrahim, A Mohsin Abdulazeez (2021) The Role of Machine Learning Algorithms for Diagnosing Diseases. Journal of Applied Science and Technology Trends 2(1): 10-19.

- Z Mushtaq, MF Ramzan, S Ali, S Baseer, A Samad, et al. (2022) Voting Classification-Based Diabetes Mellitus Prediction Using Hypertuned Machine-Learning Techniques. Mobile Information Systems.

- I Javid, A Khalaf Zager, A Khalaf, Z Alsaedi, R Ghazali (2020) Enhanced Accuracy of Heart Disease Prediction using Machine Learning and Recurrent Neural Networks Ensemble Majority Voting Method. International Journal of Advanced Computer Science and Applications 11(3).

- N Goenka, S Tiwari (2021) Deep learning for Alzheimer prediction using brain biomarkers. Artif Intell Rev 54(7): 4827-4871.

- DA Otchere, TO Arbi Ganat, R Gholami, S Ridha (2021) Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: Comparative analysis of ANN and SVM models. J Pet Sci Eng 200: 108182.

- H Cheng, M Zhang, JQ Shi (2023) A Survey on Deep Neural Network Pruning-Taxonomy, Comparison, Analysis, and Recommendations.

- SR Dubey, SK Singh, BB Chaudhuri (2022) Activation functions in deep learning: A comprehensive survey and benchmark. Neurocomputing 503: 92-108.

- T Szandała (2021) Review and Comparison of Commonly Used Activation Functions for Deep Neural Networks. Studies in Computational Intelligence 903: 203-224.

- D Alberici, P Contucci, E Mingione (2021) Deep Boltzmann Machines: Rigorous Results at Arbitrary Depth. Ann Henri Poincare 22(8): 2619-2642.

- Y Nomura, N Yoshioka, F Nori (2021) Purifying Deep Boltzmann Machines for Thermal Quantum States. Phys Rev Lett 127(6): 060601.

- M Sewak, SK Sahay, H Rathore (2020) An overview of deep learning architecture of deep neural networks and autoencoders. J Comput Theor Nanosci 17(1): 182-188.

- P Li, Y Pei, J Li (2023) A comprehensive survey on design and application of autoencoder in deep learning. Appl Soft Comput 138: 110176.

- P Lodha, A Talele, K Degaonkar (2018) Diagnosis of Alzheimer’s Disease Using Machine Learning. Proceedings - 2018 4th International Conference on Computing Communication Control and Automation (ICCUBEA).

- M Shahbaz, S Ali, A Guergachi, A Niazi, A Umer, et al. (2019) Classification of Alzheimer’s Disease using Machine Learning Techniques.

- C Kavitha, V Mani, SR Srividhya, OI Khalaf, CA Tavera Romero, et al. (2022) Early-Stage Alzheimer’s Disease Prediction Using Machine Learning Models. Front Public Health 10: 853294.

- MB Antor, Sultan Aljahdali, Manjit Kaur, Parminder Singh, Mehedi Masud, et al. (2021) A Comparative Analysis of Machine Learning Algorithms to Predict Alzheimer’s Disease.

- M Sudharsan, G Thailambal (2023) Alzheimer’s disease prediction using machine learning techniques and principal component analysis (PCA). Mater Today Proc 81(2): 182-190.

- Garam Lee, Kwangsik Nho, Byungkon Kang, Kyung Ah Sohn, Dokyoon Kim, et al. (2019) Predicting Alzheimer’s disease progression using multi-modal deep learning approach. Scientific Reports 9(1):1952.

- E Mggdadi, A Al Aiad, MS Al Ayyad, A Darabseh (2021) Prediction Alzheimer’s disease from MRI images using deep learning. 2021 12th International Conference on Information and Communication Systems 120-125.

- J Venugopalan, L Tong, HR Hassanzadeh, MD Wang (2021) Multimodal deep learning models for early detection of Alzheimer’s disease stage. Scientific Reports 11(1): 3254.

- Cristina L Saratxaga, Iratxe Moya, Artzai Picón, Marina Acosta, Estibaliz Garrote, et al. (2021) MRI deep learning-based solution for Alzheimer’s disease prediction. J Pers Med 11(9): 902.
